---

title: Virtual Machines (VMs)

source_url:

html: https://docs.nirvanalabs.io/cloud/compute/vms/

md: https://docs.nirvanalabs.io/cloud/compute/vms/index.md

---

Virtual Machines (VMs) serve as the backbone of Nirvana Cloud, offering high-level performance and flexibility required to run a wide array of applications and workloads. Capitalising on our hyper-converged infrastructure (HCI), Nirvana Cloud's VMs are designed to operate on bare metal servers, thereby providing an abstraction layer that separates the user's applications from the underlying hardware.

## Features

### Creation and Management

Nirvana Cloud allows users to create and manage VMs effortlessly using its intuitive dashboard interface. These VMs emulate physical hardware and operate independently of the underlying physical machines.

### Resource Allocation

Nirvana Cloud guarantees unhindered access to crucial resources such as compute, memory, storage, and networking for the VMs. These allocations can be adjusted based on the unique requirements of different applications.

### CPU & RAM Performance

Thanks to its ability to bypass unnecessary virtualization latencies and provide direct hardware access, Nirvana Cloud ensures top-notch performance from its VMs. This performance, marked by reduced latency and limited resource contention, is ideal for resource-intensive workloads such as data analytics, machine learning, and high-performance databases.

### Instance Customization

VMs in Nirvana Cloud can be customized to the user's needs, with the ability to modify key attributes such as CPU cores, memory, storage volume, and network configurations. This level of customization provides users with the opportunity to fine-tune their VMs to suit specific operational needs.

### VM Security & Isolation

Nirvana Cloud VMs operate in isolation from each other, providing robust security and privacy. Users have full access to the VM's operating system, and all user processes run exclusively within the VM. Nirvana Cloud's own tasks run beneath the VM layer, within the hypervisor. This setup, combined with robust network protocols, bolsters security and protects critical data and applications.

Nirvana Cloud's VMs are versatile and cater to a broad range of use cases across on-premises and cloud environments. Setting up a VM is straightforward: follow Nirvana Cloud's setup guide to get started.

---

title: CPU and Memory

source_url:

html: https://docs.nirvanalabs.io/cloud/compute/vms/cpu-and-ram/

md: https://docs.nirvanalabs.io/cloud/compute/vms/cpu-and-ram/index.md

---

Nirvana Cloud supports VMs using any combination of CPU, RAM, and storage. You are not limited to preset sizing options, allowing you to design virtual machines that align precisely with your application requirements. By adjusting the compute, memory, and storage sliders, you can easily customize the size of your virtual machine.

| | |
| :----------------------- | :------------------------------------------------------------------------------------------------------------------------------------------------ |
| **Unique Configuration** | Fully customizable VM sizes, ensuring each VM is tailored to individual project requirements. |
| **User Interface** | Users can effortlessly adjust compute, memory, and storage parameters using intuitive sliders, providing a user-friendly experience. |
| **Efficient Scaling** | As your project grows or needs change, seamlessly alter configurations without complex migrations or setups. |
| **Optimization** | By tailoring resources precisely to requirements, you can eliminate wastage and ensure cost-effectiveness, maximizing your return on investment. |

# E7 VM Specs

## CPU Type and Specs

Nirvana Cloud uses AMD EPYC 7513 processors in its bare metal servers. The EPYC 7513 is a 32-core server processor with a 2.6 GHz base clock and boost speeds of up to 3.65 GHz, ensuring rapid data processing and application responsiveness. AMD Simultaneous Multithreading (SMT) exposes two threads per core, for 64 threads in total, which benefits a wide variety of workloads.

## RAM Type and Specs

Nirvana Cloud is tailored for applications, such as blockchain data indexers and DeFi platforms, that demand large RAM capacities for optimal performance. With E7 virtual machines powered by AMD EPYC 7003 series processors, users benefit from efficient RAM utilization for Web3 workloads. Users can move between RAM configurations, from 8 GB to 224 GB, without the hassle of over-provisioning, and can adjust RAM allocations directly from the VM details page.

---

title: FAQ

source_url:

html: https://docs.nirvanalabs.io/cloud/compute/vms/faq/

md: https://docs.nirvanalabs.io/cloud/compute/vms/faq/index.md

---

---

title: Monitoring

source_url:

html: https://docs.nirvanalabs.io/cloud/compute/vms/monitoring/

md: https://docs.nirvanalabs.io/cloud/compute/vms/monitoring/index.md

---

## Performance and Utilization Metrics

When viewing the details of your virtual machine, you can access comprehensive performance and utilization metrics. These include CPU utilization, RAM utilization, storage utilization, and bandwidth ingress and egress. Monitoring these metrics provides valuable insights into resource utilization and aids in optimizing the performance of your applications.

Nirvana Cloud provides visibility into the associated resources of your virtual machine. You can identify the Virtual Private Cloud (VPC) and storage volumes linked to your VM. You can obtain in-depth information about these resources by accessing the VPC or storage volume details, facilitating efficient navigation and management within the Nirvana Cloud platform.

Note: For more detailed technical documentation and instructions, refer to the official Nirvana Cloud documentation, which provides step-by-step guidance on deploying and managing virtual machines.

## BTOP

BTOP is a feature-rich and high-performance system monitoring tool that offers users a real-time glimpse into various system metrics, including CPU, memory, and network statistics. With its intuitive and visually appealing interface, users can effortlessly navigate and drill down into detailed statistics, ensuring a comprehensive understanding of their system's performance.

BTOP comes pre-installed on Nirvana Cloud VMs, allowing users to start monitoring without any additional setup. For those looking to dive deeper into its capabilities or seeking updates, the official repository is readily available at BTOP on GitHub.

---

title: OS Images

source_url:

html: https://docs.nirvanalabs.io/cloud/compute/vms/os-images/

md: https://docs.nirvanalabs.io/cloud/compute/vms/os-images/index.md

---

Nirvana Cloud currently supports Ubuntu Linux for server creation, ensuring seamless integration and deployment. As one of the most popular OS choices across Web2 and Web3, Ubuntu offers stability and robust performance for diverse applications.

For the latest versions, please visit the [Dashboard](https://dashboard.nirvanalabs.io) or query the [API](https://docs.nirvanalabs.io/api/resources/compute/subresources/vms/subresources/os_images/methods/list/).
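As a rough sketch, the same list can also be read through the Nirvana Terraform Provider. This assumes the `nirvana_compute_vm_os_images` data source and its `items[*].name` attribute behave as they are referenced in the Terraform examples elsewhere in these docs, and that the data source needs no arguments. The output name is hypothetical.

```hcl
# Assumes the Nirvana provider is already configured in this module.
# Assumption: the data source takes no required arguments.
data "nirvana_compute_vm_os_images" "vm_os_images" {}

# Hypothetical output name; exposes the name of every available OS image.
output "available_os_image_names" {
  value = data.nirvana_compute_vm_os_images.vm_os_images.items[*].name
}
```

Running `terraform apply` followed by `terraform output available_os_image_names` would then print the image names that can be passed to `os_image_name` on a VM.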

---

title: Volumes

source_url:

html: https://docs.nirvanalabs.io/cloud/compute/volumes/

md: https://docs.nirvanalabs.io/cloud/compute/volumes/index.md

---

## Boot Volumes

Nirvana storage volumes are engineered to deliver significantly higher performance than similar elastic block storage solutions. The boot volume forms the foundation of the operating system in your Virtual Machine. It is a dedicated storage unit where the bootable operating system, system files, and data needed during the system start-up process are stored. Just like a physical computer's hard drive stores the essential files, the boot volume serves the same purpose in a VM.

Nirvana Cloud's boot volumes are designed to handle high-intensity input/output operations and deliver superior performance. They ensure that even demanding applications boot quickly and run smoothly.

## Data Volumes

Beyond the boot volume, a VM's storage capacity can be expanded by utilizing multiple data volumes.

Depending on the VM's operational needs, multiple data volumes can be added to or removed from a VM in order to increase or decrease the amount of storage.
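One way to manage several data volumes is to declare them from a map with the Nirvana Terraform Provider. The sketch below reuses the `nirvana_compute_volume` attributes (`name`, `type`, `size`, `vm_id`) shown in the storage-type pages of these docs. The variable name, the volume names, the sizes (assumed to be in GB), and a VM resource named `vm` defined elsewhere are all assumptions for illustration.

```hcl
# Hypothetical map of volume name => size (assumed to be in GB).
variable "data_volumes" {
  type = map(number)
  default = {
    "chain-data" = 500
    "snapshots"  = 200
  }
}

# One volume per map entry, each attached to the same VM.
resource "nirvana_compute_volume" "data" {
  for_each = var.data_volumes

  name  = each.key
  type  = "abs" # or "nvme", depending on what the region supports
  size  = each.value
  vm_id = nirvana_compute_vm.vm.id # assumes a nirvana_compute_vm named "vm"
}
```

Adding or removing an entry in the map adds or removes the matching volume on the next apply. Keep in mind that the VM is stopped while a volume is being added or removed.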

## Common Use Cases for Multi-Volume Deployments

* Separating the boot volume from the data volume for better performance and easier management.

* Adding additional volumes to a VM for more storage space.

* Utilizing RAID configurations for better performance and redundancy.

* Creating separate volumes for different applications or services running on the VM.

* Storing blockchain snapshots temporarily on a second volume and removing that volume after the snapshot extraction is complete.

## Things to Keep in Mind

* Volumes can only be expanded, not shrunk. Make sure to back up any important data before expanding a volume to avoid data loss.

* When adding or removing a volume from a VM, the VM will be stopped while the volume is being added or removed and will be started again once the add/remove operation is complete.

* Make sure to unmount the volume and update the fstab file before removing a volume to avoid boot issues.

* After expanding a volume, make sure to resize the filesystem on the volume to make use of the additional space.

---

title: FAQ

source_url:

html: https://docs.nirvanalabs.io/cloud/compute/volumes/faq/

md: https://docs.nirvanalabs.io/cloud/compute/volumes/faq/index.md

---

---

title: Accelerated Block Storage (ABS)

source_url:

html: https://docs.nirvanalabs.io/cloud/compute/volumes/storage-types/abs/

md: https://docs.nirvanalabs.io/cloud/compute/volumes/storage-types/abs/index.md

---

Accelerated Block Storage (ABS) is a high-performance, crypto-tuned block storage layer built for always-hot blockchain data with sustained IOPS, delivering high throughput, high availability, and cost-effective storage for I/O-intensive workloads.

ABS combines cloud elasticity with bare-metal performance, staying fast under sustained load without throttling, burst credits, or surprise bills.

## Overview

Traditional cloud storage was designed for spiky, bursty workloads. Blockchain workloads are different: blocks keep coming, generating continuous I/O for hours or days. Indexers, archives, and trace-heavy nodes stay hot under constant load with no recovery window.

ABS is engineered to stay fast under continuous load. It delivers sustained IOPS indefinitely without time-based throttling, performance cliffs, or gradual degradation.

## Availability

ABS is currently available in the **us-sva-2 (Silicon Valley)** region and is being rolled out to all new regions.

## Performance Specifications

| | |
| :-------------- | :-------------------------------------------------------------- |
| **IOPS** | 20K baseline (guaranteed) with up to 600K burst capacity |
| **Latency** | Sub-millisecond to low-millisecond (~0.3 to 1.5 ms) |
| **Throughput** | Up to 1.6 GB/s writes, 260-380 MB/s sustained, ~600 MB/s reads |
| **Volume Size** | 32 GB to 4 PB |
| **Queue Depth** | Near zero under sustained load |

## Key Features

| | |
| :------------------------ | :------------------------------------------------------------------------------------------------------------------------------------------------------------------------ |
| **Sustained Performance** | Unlike traditional cloud storage, no throttling after 2-3 hours of sustained load. Stays fast as long as your workload stays hot. |
| **High Availability** | Persistent shared volumes decoupled from compute. Data is never tied to a specific server. If a machine fails, workloads migrate instantly without rebuilds or data loss. |
| **Elastic Scale** | Scale from 32 GB to 4 PB with zero downtime. No hardware changes or re-provisioning required. |
| **Predictable Pricing** | Flat, TB-based pricing ($93.5/TB) with IOPS and throughput included. No burst credits, no metering. |
| **Node-Level Colocation** | Tightest physical data path when paired with Nirvana bare-metal compute for ultra-low latency. |

## Cost Comparison

ABS delivers significant cost savings compared to traditional cloud storage:

* **20x faster than AWS EBS gp3** and 60%+ cheaper

* **1.5x faster than AWS EBS io2** and 80%+ cheaper

## Use Cases

ABS is purpose-built for workloads that require sustained I/O performance:

* **RPC Nodes**: Archive and trace-heavy nodes that stay hot under constant load

* **Indexers**: Continuous indexing workloads with heavy write patterns

* **Databases**: ClickHouse, Postgres, and OpenSearch clusters requiring consistent performance

* **MPC Engines**: Multi-party computation workloads with deterministic latency requirements

* **Rollups**: High-throughput rollup infrastructure

* **Observability & Analytics**: Data-intensive pipelines and ETL workloads

* **Multi-Chain Data Platforms**: Large archives and multi-chain datasets

## Getting Started

ABS is available today for teams running I/O-intensive workloads. [Contact us](https://nirvanalabs.io/contact) to start a POC.

## Known Issues

### Expanding Volumes

Currently, an ABS volume cannot be expanded automatically after it is created, whether it was created with a VM or independently. The volume itself expands, but the new size is not registered correctly in the OS, so the additional space is not immediately usable.

As a temporary workaround, please reach out to the Nirvana team in Slack and we will carry out the Volume expansion.

## FAQ

**What is Nirvana ABS?**

ABS is Accelerated Block Storage, our high-IOPS, low-latency block storage solution. It is available in our generation 2 data center region, `us-sva-2`.

**Does `us-sva-2` support only ABS?**

Yes. `us-sva-2` **only** supports our new Nirvana ABS block storage. We may introduce more options in the future, but for now only ABS is supported for both `boot` and `data` volumes.

**Which regions is ABS available in?**

ABS will be rolled out and available in all next-generation sites in the coming months.

**What is the maximum size of a volume for Nirvana ABS?**

ABS can support volumes up to 4 PB. Today customers can provision up to 100 TB automatically and should reach out to the Nirvana team if they want to provision larger volumes.
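If volumes are provisioned through Terraform, these limits can be checked before a plan runs. The following is a minimal sketch using a standard Terraform variable `validation` block. It assumes that volume sizes are expressed in GB and that 100 TB is taken as 100 * 1000 GB; neither unit is confirmed here, so adjust the bounds to match the provider's actual units.

```hcl
variable "data_disk_size" {
  type        = number
  description = "ABS data volume size, assumed to be in GB."

  validation {
    # 32 GB is the documented minimum volume size; 100 TB is the documented
    # limit for automatic provisioning. The GB unit and the 1000x conversion
    # are assumptions.
    condition     = var.data_disk_size >= 32 && var.data_disk_size <= 100 * 1000
    error_message = "ABS volumes must be between 32 GB and 100 TB; contact the Nirvana team for larger sizes."
  }
}
```

With this in place, an out-of-range size fails during validation and planning rather than partway through an apply.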

**How do I use Nirvana ABS with the Terraform Provider?**

Using the [Nirvana Terraform Provider](https://registry.terraform.io/providers/nirvana-labs/nirvana/latest), set the volume type to `abs`.

For separately managed `nirvana_compute_volume` resources:

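```hcl
resource "nirvana_compute_volume" "example_compute_volume" {
  name = "my-data-volume"
  type = "abs"
  size = 100

  # Reference the VM by resource type and name; "vm" matches the
  # nirvana_compute_vm resource in the next example.
  vm_id = nirvana_compute_vm.vm.id
}
```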

For `nirvana_compute_vm` with nested `boot_volume` and `data_volumes` fields:

```hcl
resource "nirvana_compute_vm" "vm" {
  region        = var.region
  name          = "my-vm"
  os_image_name = data.nirvana_compute_vm_os_images.vm_os_images.items[0].name

  ssh_key = {
    public_key = var.ssh_public_key
  }

  cpu_config = {
    vcpu = var.cpu
  }

  memory_config = {
    size = var.memory
  }

  boot_volume = {
    size = var.boot_disk_size
    type = "abs"
  }

  data_volumes = [
    {
      name = "my-data-volume"
      size = var.data_disk_size
      type = "abs"
    }
  ]

  subnet_id         = nirvana_networking_vpc.vpc.subnet.id
  public_ip_enabled = true
  tags              = var.tags
}
```

**What filesystems work best with Nirvana ABS?**

All filesystems work with ABS. We recommend avoiding filesystems with built-in compression: ABS already handles compression at the hardware level, so compressing again in the filesystem may add latency.

---

title: Local NVMe

source_url:

html: https://docs.nirvanalabs.io/cloud/compute/volumes/storage-types/local-nvme/

md: https://docs.nirvanalabs.io/cloud/compute/volumes/storage-types/local-nvme/index.md

---

Local NVMe storage is directly attached to your VM, delivering the highest possible performance for workloads that benefit from physical proximity to compute.

NVMe (Non-Volatile Memory Express) is a communications interface and driver that leverages the high bandwidth of PCIe. Crafted to elevate performance, efficiency, and interoperability, NVMe is the industry standard for SSDs.

## Overview

Local NVMe drives are physically attached to the host machine, providing direct access without network overhead. This architecture delivers the lowest possible latency and highest throughput for I/O-intensive workloads.

Unlike network-attached storage, Local NVMe storage is ephemeral and tied to the physical host. If the VM is stopped or the host fails, data on local NVMe drives may be lost. For persistent storage needs, consider [Accelerated Block Storage (ABS)](/cloud/compute/volumes/storage-types/abs).

## Availability

Local NVMe is available in the following regions:

- us-sea-1 (Seattle)

- us-sva-1 (Silicon Valley)

- us-chi-1 (Chicago)

- us-wdc-1 (Washington DC)

- eu-frk-1 (Frankfurt)

- ap-sin-1 (Singapore)

- ap-seo-1 (Seoul)

- ap-tyo-1 (Tokyo)

## Key Features

| | |
| :-------------------------------- | :-------------------------------------------------------------------------------------------------------------------------------------- |
| **Lowest Latency** | Direct PCIe connection to the CPU eliminates network hops, delivering sub-100 microsecond latency. |
| **Maximum Throughput** | NVMe-based block storage can reach a theoretical maximum speed of 1,000,000 IOPS. |
| **Cost-Efficiency** | Nirvana's NVMe-based block storage balances speed and cost, providing a significant performance boost and up to 100x lower costs. |
| **Flexibility and Compatibility** | NVMe drives are compatible with all major operating systems, and their direct communication with the system CPU ensures rapid speeds. |

## Use Cases

Local NVMe is ideal for workloads that prioritize performance over persistence:

* **Boot Volumes**: Fast startup times for VMs

* **Scratch Space**: Temporary storage for data processing and computation

* **Caching Layers**: High-speed cache for frequently accessed data

* **Workloads with External Persistence**: Applications that replicate data to external storage or other nodes

* **Low-Latency Applications**: Workloads requiring the absolute lowest possible latency

## FAQ

**Is Local NVMe data persistent?**

Yes. However, storage is tied to the physical host, so if the host fails, data on local NVMe drives may be lost. For highly available persistent storage, use [Accelerated Block Storage (ABS)](/cloud/compute/volumes/storage-types/abs).

**When should I use Local NVMe vs ABS?**

Use **Local NVMe** when you need the absolute lowest latency and highest throughput, and your application can tolerate data loss (e.g., caching, scratch space, or workloads with external replication).

Use **ABS** when you need persistent, highly available storage that survives VM restarts and host failures.

**Which regions support Local NVMe?**

Local NVMe is available in: us-sea-1, us-sva-1, us-chi-1, us-wdc-1, eu-frk-1, ap-sin-1, ap-seo-1, and ap-tyo-1.
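Because the storage type depends on the region, a Terraform configuration can derive it from the region instead of hard-coding it. This is a minimal sketch: the region list is copied from this page, `us-sva-2` is mapped to `abs` per the ABS documentation, and the local names are hypothetical.

```hcl
# Omit this declaration if your configuration already defines var.region.
variable "region" {
  type = string
}

locals {
  # Hypothetical lookup table built from the documented availability lists.
  volume_type_by_region = {
    "us-sva-2" = "abs"
    "us-sea-1" = "nvme"
    "us-sva-1" = "nvme"
    "us-chi-1" = "nvme"
    "us-wdc-1" = "nvme"
    "eu-frk-1" = "nvme"
    "ap-sin-1" = "nvme"
    "ap-seo-1" = "nvme"
    "ap-tyo-1" = "nvme"
  }

  # Indexing with an unlisted region fails at plan time instead of
  # silently picking an unsupported storage type.
  volume_type = local.volume_type_by_region[var.region]
}
```

`local.volume_type` can then be used wherever a volume `type` is set, for example in `boot_volume` and `data_volumes`.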

**How do I use Local NVMe with the Terraform Provider?**

Using the [Nirvana Terraform Provider](https://registry.terraform.io/providers/nirvana-labs/nirvana/latest), set the volume type to `nvme`.

For separately managed `nirvana_compute_volume` resources:

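```hcl
resource "nirvana_compute_volume" "example_compute_volume" {
  name = "my-data-volume"
  type = "nvme"
  size = 100

  # Reference the VM by resource type and name; "vm" matches the
  # nirvana_compute_vm resource in the next example.
  vm_id = nirvana_compute_vm.vm.id
}
```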

For `nirvana_compute_vm` with nested `boot_volume` and `data_volumes` fields:

```hcl
resource "nirvana_compute_vm" "vm" {
  region        = var.region
  name          = "my-vm"
  os_image_name = data.nirvana_compute_vm_os_images.vm_os_images.items[0].name

  ssh_key = {
    public_key = var.ssh_public_key
  }

  cpu_config = {
    vcpu = var.cpu
  }

  memory_config = {
    size = var.memory
  }

  boot_volume = {
    size = var.boot_disk_size
    type = "nvme"
  }

  data_volumes = [
    {
      name = "my-data-volume"
      size = var.data_disk_size
      type = "nvme"
    }
  ]

  subnet_id         = nirvana_networking_vpc.vpc.subnet.id
  public_ip_enabled = true
  tags              = var.tags
}
```

---

title: Introduction to Nirvana Cloud

source_url:

html: https://docs.nirvanalabs.io/cloud/introduction/

md: https://docs.nirvanalabs.io/cloud/introduction/index.md

---

Nirvana Cloud is a Web3-native cloud provider designed to reduce cloud centralization and promote a decentralized infrastructure, especially in underserved regions. The platform is optimized for efficient data processing, tailored specifically for Web3 workloads. Utilizing selected CPUs optimized for rapid cryptographic calculations, Nirvana Cloud achieves higher transactions per second, ensuring rapid data processing and maximizing throughput for demanding applications.

Nirvana Cloud is engineered specifically for high-performance Web3 applications. Developed by the Nirvana Labs team, its unique features include an ultra-lightweight hypervisor and an advanced software-defined networking layer. Unlike traditional cloud providers, Nirvana Cloud's innovative approach positions it at the forefront of both Web2 and Web3 sectors.

Nirvana Cloud stands at the forefront of the shift toward decentralized infrastructure. Our model sets a new standard by replacing massive centralized data centers with a globally distributed network of Points of Presence (PoPs). This strategic alignment with top-tier data center providers worldwide ensures genuine infrastructure diversity.

## Data Center Diversity

Our decentralized approach offers multiple advantages:

* **Resilience**: A decentralized model curtails the risk of widespread failures. Localized disruptions remain isolated, safeguarding system integrity.

* **Performance**: With global PoPs, user requests are processed closer to their origin, slashing latency and delivering a stellar user experience.

* **Redundancy**: Our infrastructure dynamically reroutes traffic based on data center metrics, ensuring seamless service continuity.

This strategy aligns with the fundamental concepts of decentralization, taking them a step further. Nirvana Cloud not only decentralizes the software and platform aspects but also extends this decentralization to the physical infrastructure, thereby strengthening user confidence.

## Web3 vs. Traditional Cloud Providers

Web3's revolutionary shift towards decentralization clashes with the centralized anchors of traditional Web2 cloud giants. These behemoths often eclipse the core values Web3 stands for. A glaring example is the alarming reliance of Ethereum nodes on centralized cloud services, posing operational risks and potential biases. To ensure Web3 achieves its transformative potential, a shift to cloud solutions resonating with its decentralized spirit is paramount.

### Performance, Scalability, and Reliability Tailored for Web3

Nirvana Cloud is meticulously crafted to cater to Web3's unique demands:

* **Computational Power**: Our infrastructure boasts CPUs with unmatched clock speeds, ensuring top-tier performance for cryptographic operations.

* **Memory Operations**: Our focus on low-latency RAM ensures seamless smart contract functionalities and interactions with the global state.

* **Storage Efficiency**: With NVMe SSDs at the helm, our storage solutions are primed for the burgeoning datasets of blockchains.

* **Networking**: Our P2P-centric networking stack ensures high-speed data transmissions, striking the ideal balance between latency and throughput.

At the core of Nirvana Cloud is a commitment to reliability. We ensure unwavering service availability with redundant systems, failover protocols, and best-in-class backup solutions, setting a new gold standard in the Web3 cloud domain.

---

title: What are the advantages of Nirvana Cloud?

source_url:

html: https://docs.nirvanalabs.io/cloud/introduction/advantages/

md: https://docs.nirvanalabs.io/cloud/introduction/advantages/index.md

---

Nirvana Cloud's architecture is designed to meet the needs of high-performance Web3 workloads and traditional Web2 environments. The hardware stack behind Nirvana Cloud is optimized for rapid data processing and maximum throughput, offering several considerable advantages over traditional cloud platforms:

## Low Latency, High Throughput

Nirvana Cloud's architecture is designed to minimize overheads, ensuring rapid data processing for demanding applications. Our optimized hardware stack, specifically tailored for Web3 workloads, includes handpicked CPU options that excel at solving complex cryptographic equations quickly. This investment reflects our commitment to delivering an infrastructure optimized for rapid data processing and high transaction throughput.

## Bandwidth Cost Optimization

A simplified software-defined networking stack allows Nirvana Cloud to streamline data routing, reducing redundant transfers and maximizing bandwidth utilization. Through key relationships and negotiations with carrier network providers, we offer a competitive unit cost, surpassing what traditional cloud providers typically offer, resulting in substantial cost savings for our users.

## Minimal Virtualization

Leveraging the power of minimal virtualization, Nirvana Cloud prioritizes direct hardware access. By minimizing the layers of virtualization, we ensure that applications experience reduced storage latency and can harness near-native performance from the underlying hardware. This design allows us to support an infrastructure where data processing is swift, storage responsiveness is immediate, and overall system performance is maximized.

## CPU & RAM Scalability

Nirvana Cloud's platform features a bare metal hypervisor design that prioritizes adaptability and scalability. Applications can adjust resources in real time, ensuring consistent performance. Users can create virtual machines with any combination of CPUs, RAM, and Storage, eliminating the issue of underutilized or overutilized resources commonly encountered with other cloud providers.

## Resilience & Reliability

Nirvana Cloud prioritizes high application uptime and performance. Our infrastructure incorporates time-tested open-source technologies, ensuring stability and optimal performance. We automatically back up every virtual machine storage volume to secure buckets, ensuring data integrity. With a global presence in over 20 regions and multiple Points of Presence (PoPs) worldwide, users can leverage multi-regional VPC peering to construct resilient, high-availability workloads. Our partnerships with premier data center providers ensure consistent power, efficient cooling, and swift access to essential components, strengthening our commitment to uninterrupted service.

## Web3-Focused Tooling

Nirvana Cloud provides developers with specialized toolsets to facilitate the transition and deployment of Web3 applications. Our core products, such as VPCs and VMs, are designed from the hardware to the networking layer with Web3 workloads in mind. Our services extend to specialized storage solutions. Looking ahead, we plan to introduce sophisticated cryptographic services, critical for the burgeoning DeFi sector.

## Security & Privacy

Nirvana Cloud places a paramount focus on security and privacy. Our infrastructure is safeguarded with comprehensive encryption, a zero-trust model, continuous security assessments, and isolation techniques for user workloads. Committed to global compliance standards, Nirvana is SOC2 certified.

Furthermore, Nirvana Cloud blends software and hardware security measures designed specifically for Web3 developers to ensure a robust cryptographic environment.

---

title: What are common use cases for Nirvana Cloud?

source_url:

html: https://docs.nirvanalabs.io/cloud/introduction/use-cases/

md: https://docs.nirvanalabs.io/cloud/introduction/use-cases/index.md

---

Nirvana Cloud's infrastructure is specifically constructed to excel with decentralized workloads, offering optimized performance for scenarios that require high requests per second (RPS), minimal latency, and exceptional reliability. It is designed with the foresight to handle the rigorous demands of Web3 applications, such as cryptocurrency transactions, smart contracts, and decentralized finance (DeFi) services, which necessitate swift and secure data processing capabilities.

At the same time, Nirvana Cloud is equally adept at supporting the traditional needs of Web2 applications. This ensures that organizations that rely on standard web services for e-commerce, content delivery networks, and enterprise systems can leverage Nirvana Cloud's robustness and reliability. With its dual-use capability, Nirvana Cloud is an ideal platform for businesses looking to operate within the Web2 ecosystem while preparing for the transition to or incorporation of Web3 technologies, allowing for a broad range of use cases from legacy systems to cutting-edge decentralized applications.

### Web3-specific use cases

**Decentralized Exchanges (DEXs)**: Nirvana Cloud ensures swift settlement of trades and order book updates on DEXs, enhancing user experience with its high RPS support and low latency.

**Blockchain-based Gaming Platforms**: Real-time asset transfers and player-vs-player actions require high RPS and low latency. Nirvana Cloud enables smooth gameplay, accommodating a large number of simultaneous players.

**Decentralized Finance (DeFi) Platforms**: Financial transactions on lending or derivatives platforms need to adapt quickly to market conditions. Nirvana Cloud can support the rapid-response requirements of DeFi applications.

**Decentralized Autonomous Organizations (DAOs):** Speed and reliability are crucial for DAOs conducting voting or consensus mechanisms. DAOs on Nirvana Cloud can ensure prompt member action processing, reflecting real-time governance changes.

**Supply Chain dApps**: Decentralized supply chain solutions rely on high RPS and low latency for real-time goods movement updates, ensuring accurate tracking.

**Decentralized Content Delivery Platforms**: Nirvana Cloud's low latency guarantees uninterrupted content delivery, even during demand surges. This is crucial for Web3 streaming or content-sharing platforms.

**NFT Marketplaces:** Nirvana Cloud guarantees seamless NFT listings, bids, and transfers.

**Oracles**: Oracles that provide external data to blockchains require high RPS and low latency for timely data delivery to dependent smart contracts. Nirvana Cloud meets these essential requirements.

**Cross-Chain Bridges**: For dApps operating across multiple blockchains, swift and reliable inter-chain communication is pivotal. Nirvana Cloud facilitates smooth transfers of tokens or data across different blockchains.

**Custodial Platforms**: Secure and reliable operations are crucial for custodial platforms that hold cryptocurrencies, NFTs, or other digital assets. Nirvana Cloud provides an environment where transactions, withdrawals, and deposits are processed quickly and securely.

**Web Hosting**: Nirvana Cloud's scalable infrastructure is suitable for web hosting, supporting everything from small personal blogs to large e-commerce sites. Its decentralized nature ensures high uptime and resistance to DDoS attacks, while high RPS and low latency contribute to fast-loading web pages, critical for SEO and user satisfaction.

### Web2-specific use cases

**Databases**: Nirvana Cloud guarantees quick data access speeds for distributed databases requiring high transaction throughput, ensuring consistent performance even under heavy load. This facilitates real-time analytics and transaction processing for Web3 applications.

**Mail Servers**: Nirvana Cloud's globally distributed Points of Presence (PoPs) provide high availability, enabling secure and efficient handling of email traffic. The network's decentralized nature can significantly reduce the risk of downtime and mail service disruptions.

**Applications (Frontend/Backend)**: Nirvana Cloud supports diverse application workloads, offering developers the ability to scale resources for both frontend interfaces and backend processing as needed. It delivers an agile environment to deploy microservices, APIs, and full-stack applications with consistent performance and reliability.

**Kubernetes/Containerization Environments**: Nirvana Cloud is well-suited for Kubernetes and other container orchestration systems, providing a resilient infrastructure for container deployment and management. It enhances the deployment of microservices and dynamic scaling of workloads with high efficiency and minimal latency.

**Backup Servers**: With Nirvana Cloud, backup systems become more reliable and secure, offering decentralized storage solutions to protect against data loss and outages. Its high RPS capabilities ensure quick backup and restore operations, an essential feature for disaster recovery strategies.

**File Servers**: Nirvana Cloud's file servers benefit from its decentralized structure, allowing for secure and speedy file access and sharing across the globe. High RPS allows for multiple concurrent file transfers without bottlenecks, which is vital for collaboration in Web3 spaces.

**Game Servers**: Resource- and traffic-intensive games can rely on Nirvana Cloud to host game servers that need high RPS to manage game state for many concurrent users. Low latency is vital for real-time multiplayer games, ensuring fair and responsive gameplay.

By catering to these decentralized workloads with high throughput, reduced latency, and unparalleled reliability, Nirvana Cloud establishes itself as an indispensable infrastructure ally for the evolving Web3 ecosystem.

---

title: Networking

source_url:

html: https://docs.nirvanalabs.io/cloud/networking/

md: https://docs.nirvanalabs.io/cloud/networking/index.md

---

Networking plays an integral role in all use cases, as it enables seamless connectivity and communication of the various components within your deployed architecture. Nirvana Cloud provides a variety of networking tools and services that can be leveraged for efficient, reliable, and secure communication.

## Virtual Private Cloud (VPC)

One essential feature of Nirvana Cloud is its Virtual Private Cloud (VPC), which allows you to provision a logically isolated section of the cloud. The VPC provides the environment within which you can deploy your applications securely and is isolated from other Virtual Private Clouds.

## Site to Site Mesh

A site-to-site mesh interconnects subnets in different locations via our mesh network, creating a secure communication channel between them. It is analogous to extending a private network across the internet so that resources communicate as if all locations were on the same local network.

Overall, Nirvana Cloud's networking features are designed to provide scalable and secure environments for businesses to deploy applications and databases. Understanding how these features work is crucial to achieving optimal performance and security.

---

title: Overview

source_url:

html: https://docs.nirvanalabs.io/cloud/networking/connect/

md: https://docs.nirvanalabs.io/cloud/networking/connect/index.md

---

Nirvana Connect is a dedicated, low-latency, private interconnect fabric that links Nirvana Cloud directly to external cloud providers such as AWS, GCP, and Azure. Unlike the public internet, Nirvana Connect establishes dedicated fiber circuits through reserved private network paths, enabling secure, high-performance data transfer with predictable latency and enhanced reliability.

## How It Works

Nirvana Connect leverages a Tier 1 global infrastructure provider to provision private virtual circuits that directly connect Nirvana Cloud to major cloud providers. These circuits use existing fiber infrastructure already laid between data centers and cloud providers.

Instead of installing new physical cables, Nirvana reserves bandwidth on these private links to create dedicated, secure, high-speed connections between environments. Because data travels through a private path rather than the public internet, traffic avoids standard egress fees, reduces congestion, and gains stable, predictable throughput.

Nirvana covers the infrastructure costs, so you don't have to.

## Key Features

- **Private fiber connections** between Nirvana Cloud and major cloud providers (AWS, GCP, Azure)

- **Sub-millisecond latency** unaffected by public traffic

- **Pre-provisioned infrastructure** requiring no new physical installation

- **Quick activation** through the dashboard, API, Terraform and all SDKs

- **Multiple bandwidth tiers** from 50 Mbps to 2 Gbps

## Benefits

### Eliminate Egress Fees

By bypassing the public internet, Nirvana Connect removes standard egress charges entirely. This can reduce data transfer costs by up to 90% compared to traditional public internet routing between cloud environments.

### Lower Latency

Dedicated private circuits deliver sub-millisecond latency unaffected by public traffic. Fewer network hops and direct connectivity ensure consistent uptime for latency-sensitive operations.

### Enhanced Security

Data traverses private, isolated network paths rather than the public internet. This isolation provides enhanced security and privacy while preserving your existing IP and security rules. It also makes it easier to maintain compliance across multi-cloud environments.

### Stable, Predictable Throughput

Private circuits maintain reliable performance independent of public network congestion. This stability is critical for high-frequency trading, real-time systems, and other operations that require consistent network behavior.

### Multi-Cloud Flexibility

Seamlessly integrate multiple cloud providers without being tied to a single ecosystem. Offload Web3-intensive workloads to Nirvana Cloud while maintaining existing cloud setups on AWS, Azure, or GCP.

## Use Cases

### Blockchain Node Operations

Run blockchain nodes on Nirvana Cloud while hosting indexers, frontends, or supporting services on other cloud providers. Nirvana Connect ensures high-bandwidth, stable connectivity between these components while keeping node infrastructure isolated from the public internet.

### High-Frequency Trading

HFT operations in Web3 depend on ultra-fast, deterministic latency between trading systems and blockchain nodes. Nirvana Connect provides dedicated, low-latency circuits, redundant paths, and congestion-free routing to optimize execution speed and reliability.

### Kubernetes Cluster Mesh

Teams running Kubernetes across multiple clouds can use Nirvana Connect to enable CNI-level networking (e.g., with Cilium), allowing pods and services to communicate seamlessly across providers. Direct, secure connectivity improves service mesh performance, reduces latency, and lowers inter-cluster traffic costs.

### Hybrid Multi-Cloud Architectures

Host Elasticsearch on Nirvana while ingesting data from third-party providers. Run RPC nodes on Nirvana while maintaining AWS application services. Any scenario requiring large-volume data transfer between clouds at scale benefits from private connectivity.

## Supported Regions

Nirvana Connect currently operates private network hubs in:

- Seattle (us-sea-1)

- Silicon Valley (us-sva-1)

- Chicago (us-chi-1)

- Washington, D.C. (us-wdc-1)

- Frankfurt (eu-frk-1)

- Singapore (ap-sin-1)

- Seoul (ap-seo-1)

- Tokyo (ap-tyo-1)

## Supported Cloud Providers

Currently, Nirvana Connect supports direct interconnect to **AWS**. Additional providers are coming soon.

## Pricing

Nirvana Connect is currently in a trial period, free for all Nirvana Cloud users. Pricing will be introduced after the trial, with options for partners and dedicated interconnects.

## Next Steps

Ready to set up your private connection? Follow the [Set up AWS Direct Connect](/cloud/networking/connect/how-to/set-up-aws-direct-connect/) guide to create your first connection between Nirvana Cloud and AWS.

For more details, see the [FAQ](/cloud/networking/connect/faq/).

## FAQ

### What bandwidth options does Nirvana Connect support?

Nirvana Connect currently supports the following bandwidth tiers:

- 50 Mbps

- 200 Mbps

- 500 Mbps

- 1 Gbps

- 2 Gbps

### How much can I save?

By eliminating per-GB egress billing, Nirvana Connect can reduce data transfer costs by up to 90% compared to traditional public internet routing between cloud environments.

There are no Nirvana egress fees, and AWS egress rates over Direct Connect are typically around $0.02 per GB, compared to roughly $0.09 per GB over the public internet.

### Where is the private fiber from?

Nirvana Connect runs on private fiber provided through Nirvana's long-term infrastructure partner, linking Nirvana Cloud directly to major cloud providers.

---

title: FAQ

source_url:

html: https://docs.nirvanalabs.io/cloud/networking/connect/faq/

md: https://docs.nirvanalabs.io/cloud/networking/connect/faq/index.md

---

## What is Nirvana Connect?

Nirvana Connect is a private interconnect service that establishes dedicated fiber circuits between Nirvana Cloud and major cloud providers such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP).

Unlike the public internet, Nirvana Connect links these environments through reserved private network paths, enabling secure, high-performance data transfer with predictable latency and enhanced reliability. This integration allows applications and data to move freely between multiple clouds, letting teams treat them as a single, unified network.

## How does Nirvana Connect work?

Nirvana Connect works by leveraging a Tier 1 global infrastructure provider to provision private virtual circuits that directly connect Nirvana Cloud to major cloud providers like Amazon Web Services (AWS) and Google Cloud Platform (GCP).

These circuits use existing fiber infrastructure already laid between data centers and the cloud providers. Instead of installing new physical cables, Nirvana reserves bandwidth on these private links to create dedicated, secure, high-speed connections between environments — giving users faster, more reliable network performance and meaningful cost savings.

In short, Nirvana covers the infrastructure costs, so you don't have to.

Because data travels through a private path rather than the public internet, customers avoid standard egress fees, reduce congestion, and gain stable, predictable throughput. Customers can self-provision these circuits through the Nirvana Cloud dashboard or API, enabling flexible and scalable connectivity.

## Who is Nirvana Connect for?

Nirvana Connect is available to all Nirvana Cloud users.

It's designed for teams running workloads such as:

- Node runners and RPC infrastructure

- Indexers and blockchain analytics

- Cross-chain infrastructure and messaging

- Real-time backend and transaction systems

- High-frequency and latency-sensitive applications

## What are the benefits of using Nirvana Connect?

**Lower latency and high availability**

- Dedicated private circuits provide predictable, low-latency performance compared to public internet.

- Fewer network hops and direct connectivity ensure consistent uptime for latency-sensitive operations.

**Enhanced security and compliance**

- Data traverses private, isolated network paths for enhanced security and privacy.

- Easier to maintain compliance across multi-cloud environments.

**Flexible workload management**

- Offload Web3-intensive workloads to Nirvana Cloud while maintaining existing cloud setups on AWS, Azure, or GCP.

- Efficiently allocate resources across providers based on performance or regulatory needs.

**Cost optimization**

- By bypassing the public internet, Nirvana Connect avoids standard egress charges.

- Greater cost savings at higher transfer volumes.

**Freedom from vendor lock-in**

- Seamlessly integrate multiple cloud providers without being tied to a single ecosystem.

## What are common use cases for Nirvana Connect?

**High-Frequency Trading**

HFT operations in Web3 depend on ultra-fast, deterministic latency between trading systems and blockchain nodes.

Nirvana Connect provides dedicated, low-latency circuits, redundant paths, and congestion-free routing to optimize execution speed and reliability.

**Blockchain Node Operations**

Many organizations run blockchain nodes on Nirvana Cloud while hosting indexers, frontends, or supporting services on other cloud providers.

Nirvana Connect ensures high-bandwidth, stable connectivity between these components while keeping node infrastructure isolated from the public internet.

**Kubernetes Cluster Mesh**

Teams running Kubernetes across multiple clouds can use Nirvana Connect to enable CNI-level networking (e.g., with Cilium), allowing pods and services to communicate seamlessly across providers.

Direct, secure connectivity improves service mesh performance, reduces latency, and lowers inter-cluster traffic costs.

## How much can I save?

By eliminating per-GB egress billing, Nirvana Connect can reduce data transfer costs by up to 90% compared to traditional public internet routing between cloud environments.

There are no Nirvana egress fees, and AWS egress rates over Direct Connect are typically around $0.02 per GB, compared to roughly $0.09 per GB over the public internet.
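As a rough worked example, the per-GB rates quoted above can be turned into a monthly estimate. This is only a sketch: the function names and the 10 TB monthly volume are illustrative assumptions, and real AWS pricing varies by region and volume.

```python
# Illustrative per-GB rates taken from the text above; real AWS pricing varies.
PUBLIC_INTERNET_USD_PER_GB = 0.09
DIRECT_CONNECT_USD_PER_GB = 0.02


def monthly_egress_cost(gb_transferred: float, usd_per_gb: float) -> float:
    """Return the egress cost in USD for a given monthly volume."""
    return gb_transferred * usd_per_gb


def savings_fraction(public_rate: float, private_rate: float) -> float:
    """Return the fraction of the egress cost saved by the lower rate."""
    return 1 - private_rate / public_rate


volume_gb = 10_000  # hypothetical volume: 10 TB per month
public_cost = monthly_egress_cost(volume_gb, PUBLIC_INTERNET_USD_PER_GB)
private_cost = monthly_egress_cost(volume_gb, DIRECT_CONNECT_USD_PER_GB)
saved = savings_fraction(PUBLIC_INTERNET_USD_PER_GB, DIRECT_CONNECT_USD_PER_GB)

print(f"Public internet: ${public_cost:,.2f}")
print(f"Direct Connect:  ${private_cost:,.2f}")
print(f"Saved:           {saved:.0%}")
```

Substitute your own monthly volume and the rates that apply to your AWS region to get a figure for your workload.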

## Where does Nirvana Connect currently operate network hubs?

Nirvana Connect currently operates private network hubs in:

- Seattle (us-sea-1)

- Silicon Valley (us-sva-1)

- Chicago (us-chi-1)

- Washington, D.C. (us-wdc-1)

- Frankfurt (eu-frk-1)

- Singapore (ap-sin-1)

- Seoul (ap-seo-1)

- Tokyo (ap-tyo-1)

These hubs support enterprise and latency-sensitive workloads, providing direct, private connectivity between Nirvana Cloud and major cloud providers.

## How many cloud providers does Nirvana Connect support?

Currently, Nirvana Connect supports direct interconnect to AWS. Support for GCP can be enabled on demand in under 10 minutes.

Nirvana Connect can also support additional providers, including Microsoft Azure and Alibaba Cloud, as the network fabric is designed to scale flexibly to multiple providers.

## Where is the private fiber from?

Nirvana Connect runs on private fiber provided through Nirvana's long-term infrastructure partner, linking Nirvana Cloud directly to major cloud providers.

## What's the difference between Nirvana Connect and Nirvana Network?

Nirvana Connect supports self-connections, linking your own environments between Nirvana Cloud and other cloud providers.

Nirvana Network (coming soon) will enable cross-company private connections, allowing different Web3 providers on Nirvana Cloud to exchange data over the same private fiber backbone.

## What bandwidth options does Nirvana Connect support?

Nirvana Connect currently supports the following bandwidth tiers:

- 50 Mbps

- 200 Mbps

- 500 Mbps

- 1 Gbps

- 2 Gbps
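When choosing a tier, it can help to estimate how long a bulk transfer would take at each bandwidth. The sketch below assumes an ideal link (no protocol overhead or contention) and decimal units; the 1 TB dataset size and the function name are hypothetical.

```python
# Bandwidth tiers listed above, expressed in Mbps.
BANDWIDTH_TIERS_MBPS = [50, 200, 500, 1000, 2000]


def transfer_seconds(size_gb: float, bandwidth_mbps: float) -> float:
    """Ideal transfer time in seconds, ignoring overhead and contention."""
    size_megabits = size_gb * 8 * 1000  # 1 GB = 8,000 megabits (decimal units)
    return size_megabits / bandwidth_mbps


for tier in BANDWIDTH_TIERS_MBPS:
    hours = transfer_seconds(1000, tier) / 3600  # hypothetical 1 TB dataset
    print(f"{tier:>5} Mbps: {hours:.1f} h")
```

Real-world throughput will be somewhat lower than the nominal tier, so treat these numbers as a lower bound on transfer time.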

## Is there a cost to use Nirvana Connect?

Nirvana Connect is currently in a trial period, free for all Nirvana Cloud users.

Pricing will be introduced after the trial, with options for partners and dedicated interconnects.

## How can I get started?

If you're already using Nirvana Cloud, you can activate Nirvana Connect directly from your dashboard or API.

New partners can [reach out to the team](https://nirvanalabs.io/contact) for onboarding support.

---

title: Set up AWS Direct Connect

source_url:

html: https://docs.nirvanalabs.io/cloud/networking/connect/how-to/set-up-aws-direct-connect/

md: https://docs.nirvanalabs.io/cloud/networking/connect/how-to/set-up-aws-direct-connect/index.md

---

In this step-by-step guide, you’ll learn how to set up Nirvana Connect to create a secure, private connection between your Nirvana VPC and your AWS environment.

Whether you’re logging in for the first time or configuring a production-grade private link, this guide will walk you through the entire process, from signing in, creating your first VPC, and provisioning a connection, to completing the setup on AWS.

## Step 0: Log in or Sign Up

1. Visit [dashboard.nirvanalabs.io](https://dashboard.nirvanalabs.io)

2. Log in with your credentials or click **Sign up** to create a new account

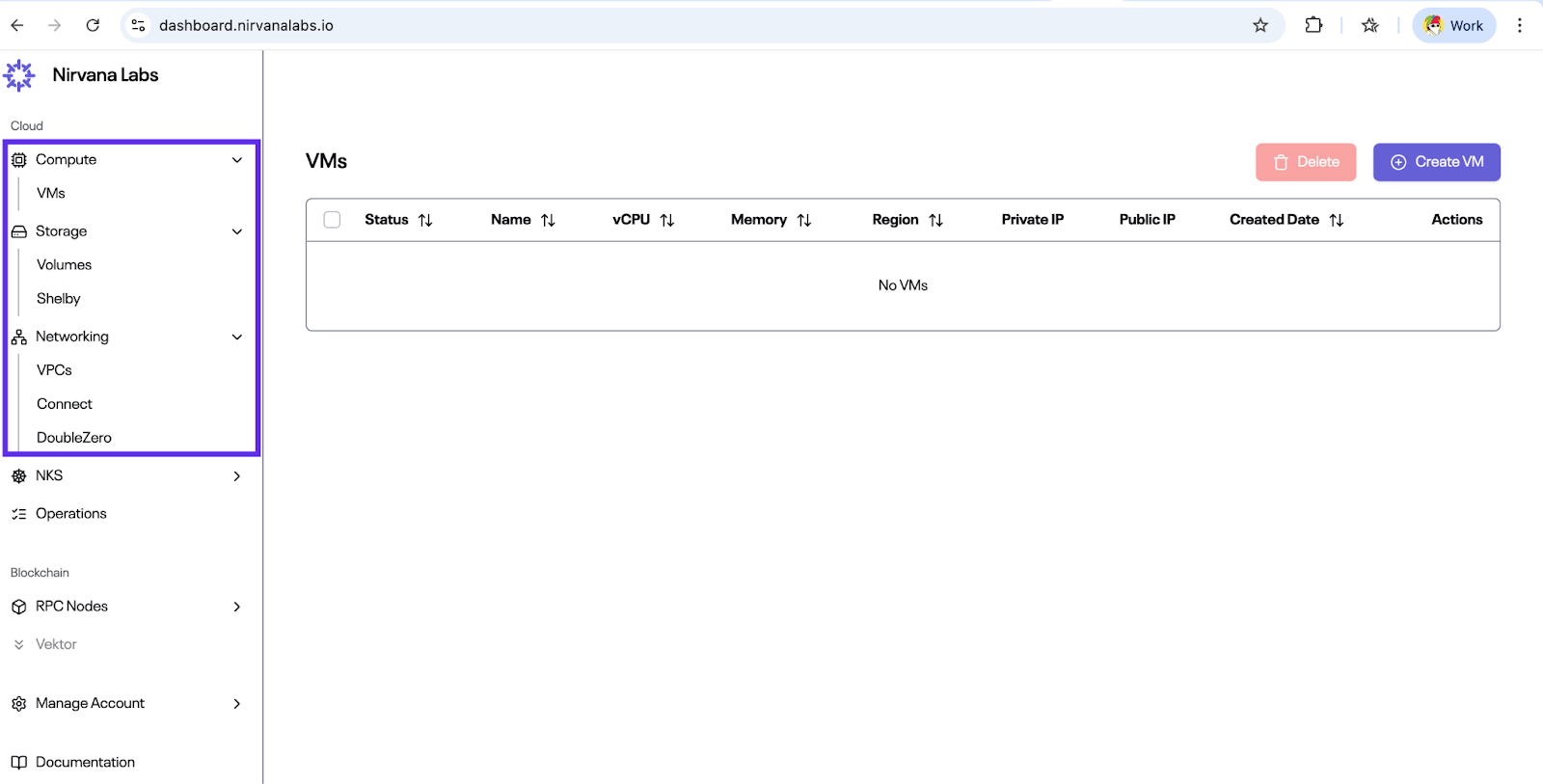

3. Once logged in, click **Cloud Dashboard** in the sidebar to access the main control panel

You will now see the **Compute**, **Storage**, and **Networking** menus in the navigation panel.

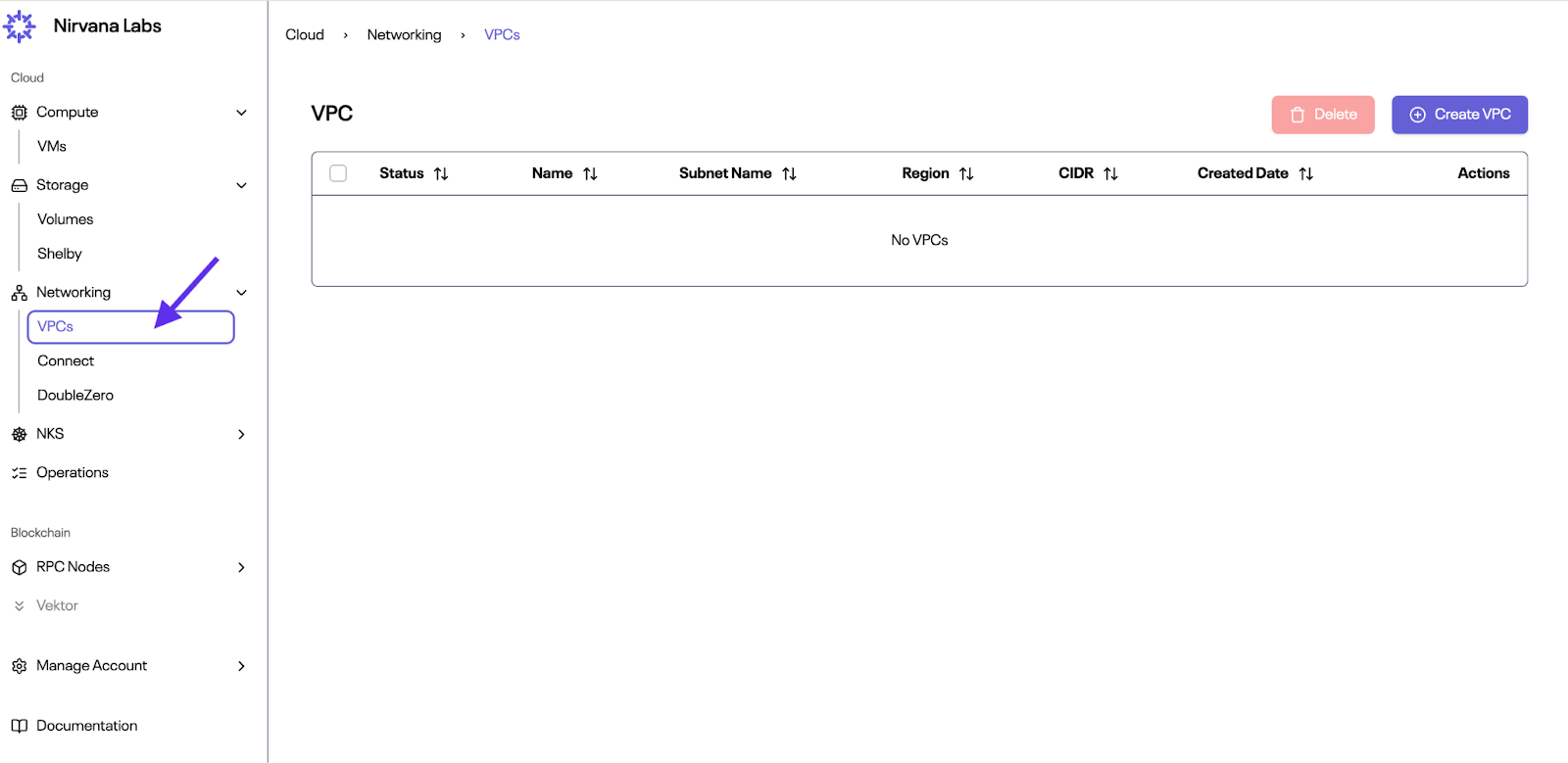

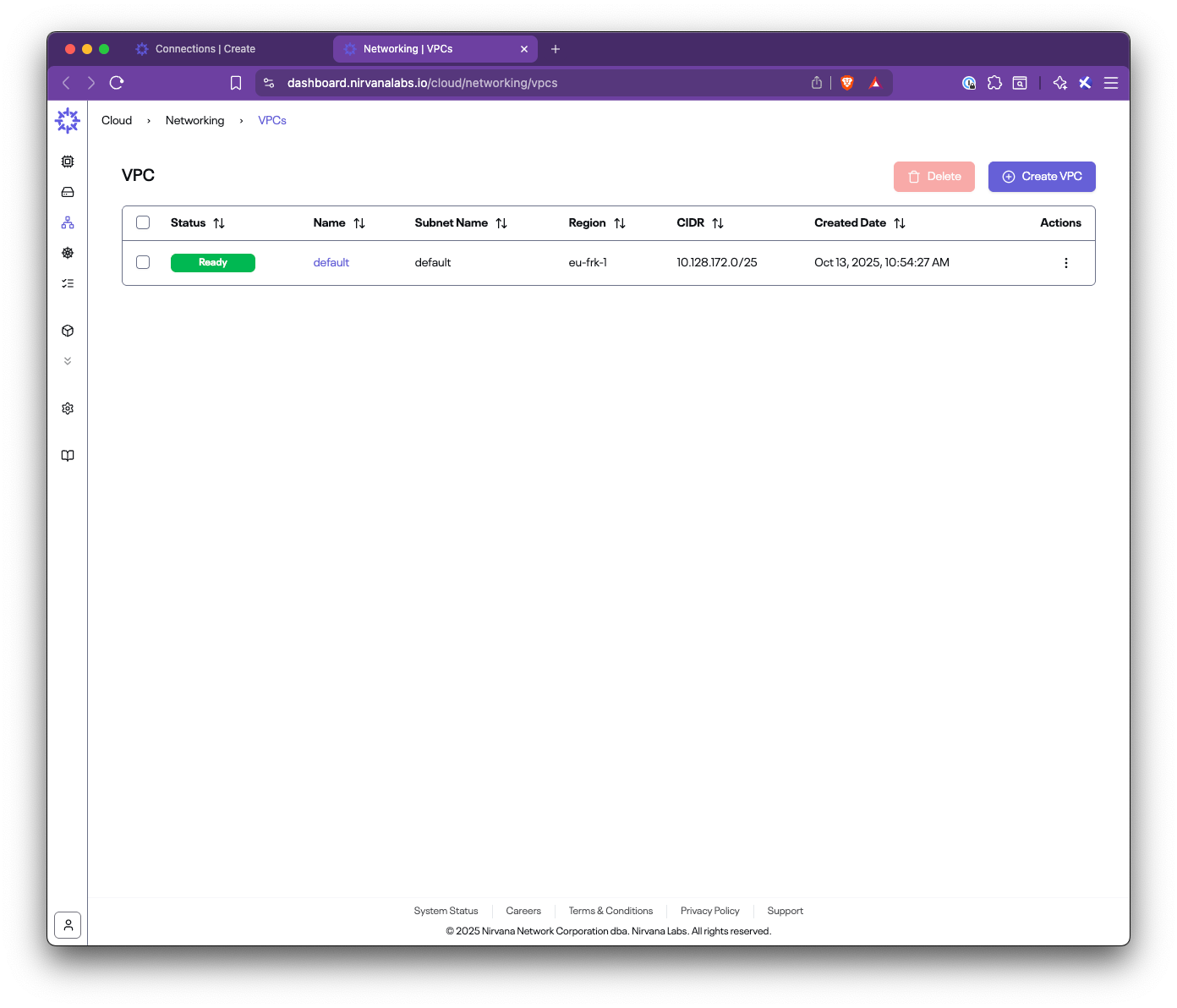

## Step 1: Create (or Verify) Your VPC

1. Navigate to **Networking → VPCs**

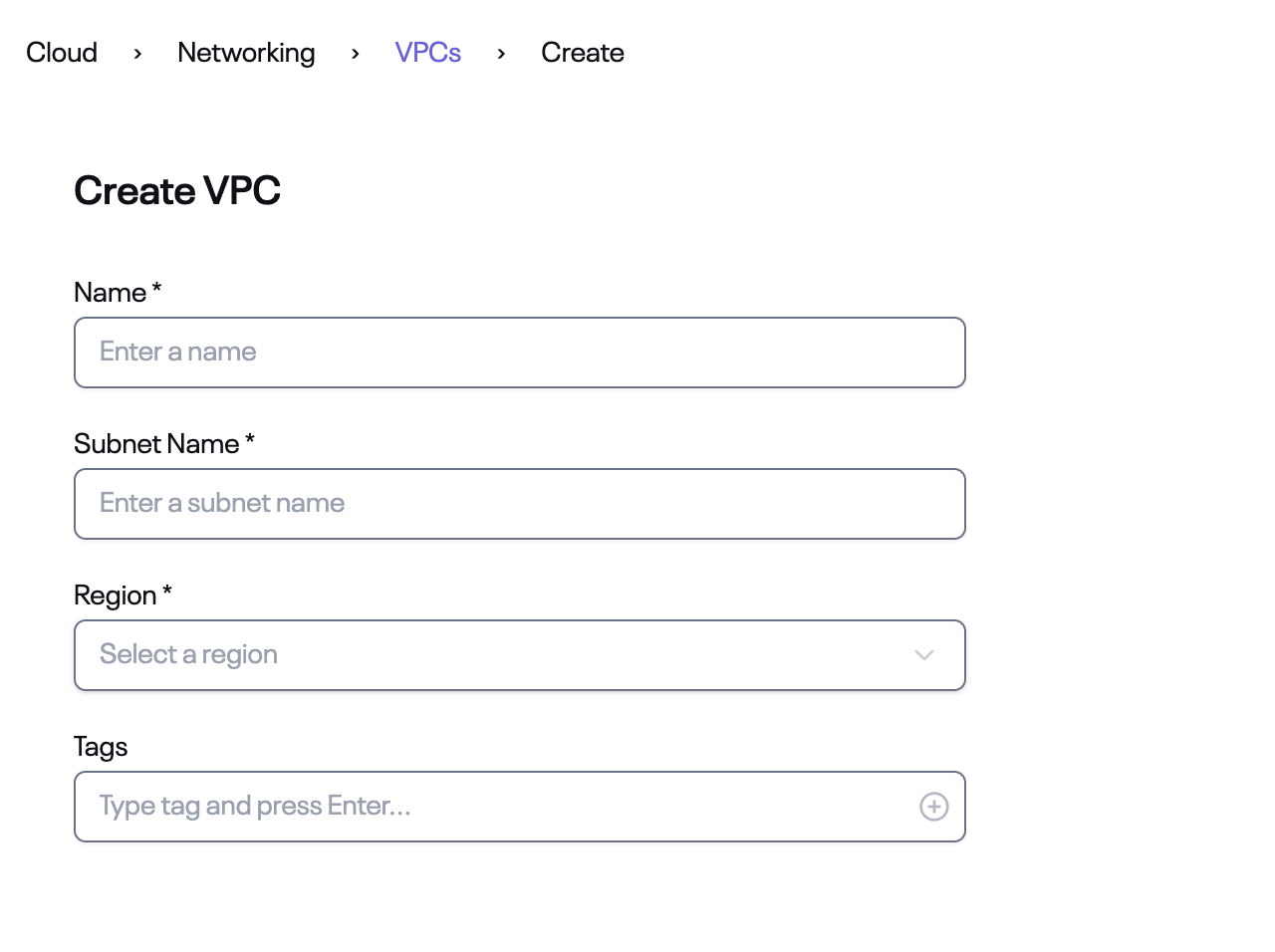

2. If this is your first time, the table will be empty. Click **Create VPC**

3. Fill in:

- Name

- Subnet Name

- Region

- (Optional) Tags

4. Click **Create**. Once created, the VPC will appear with **Status: Ready**

:::note

You need an existing VPC before creating a connection. Its CIDR will be advertised to AWS during the setup.

:::

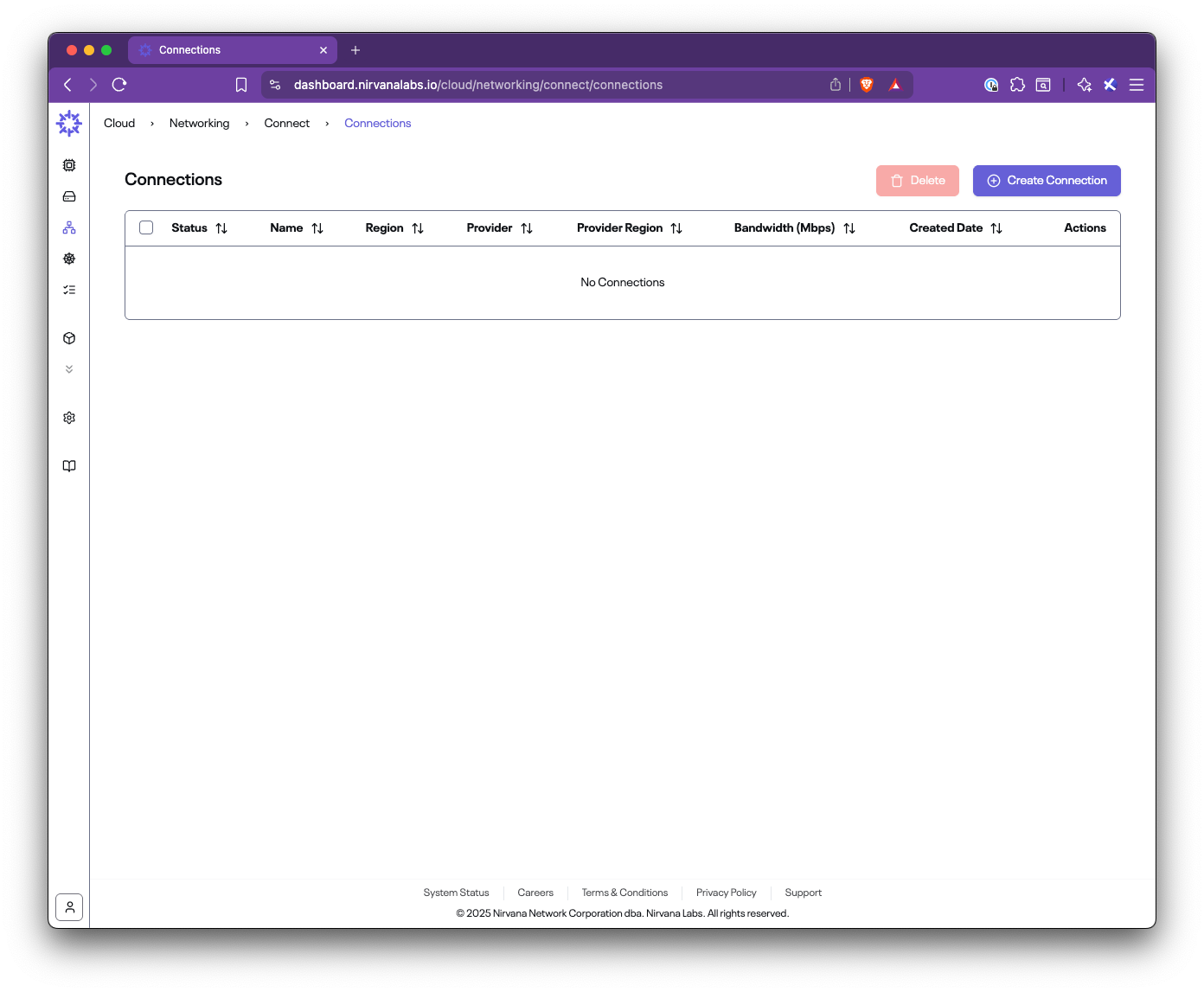

## Step 2: Create a Connection

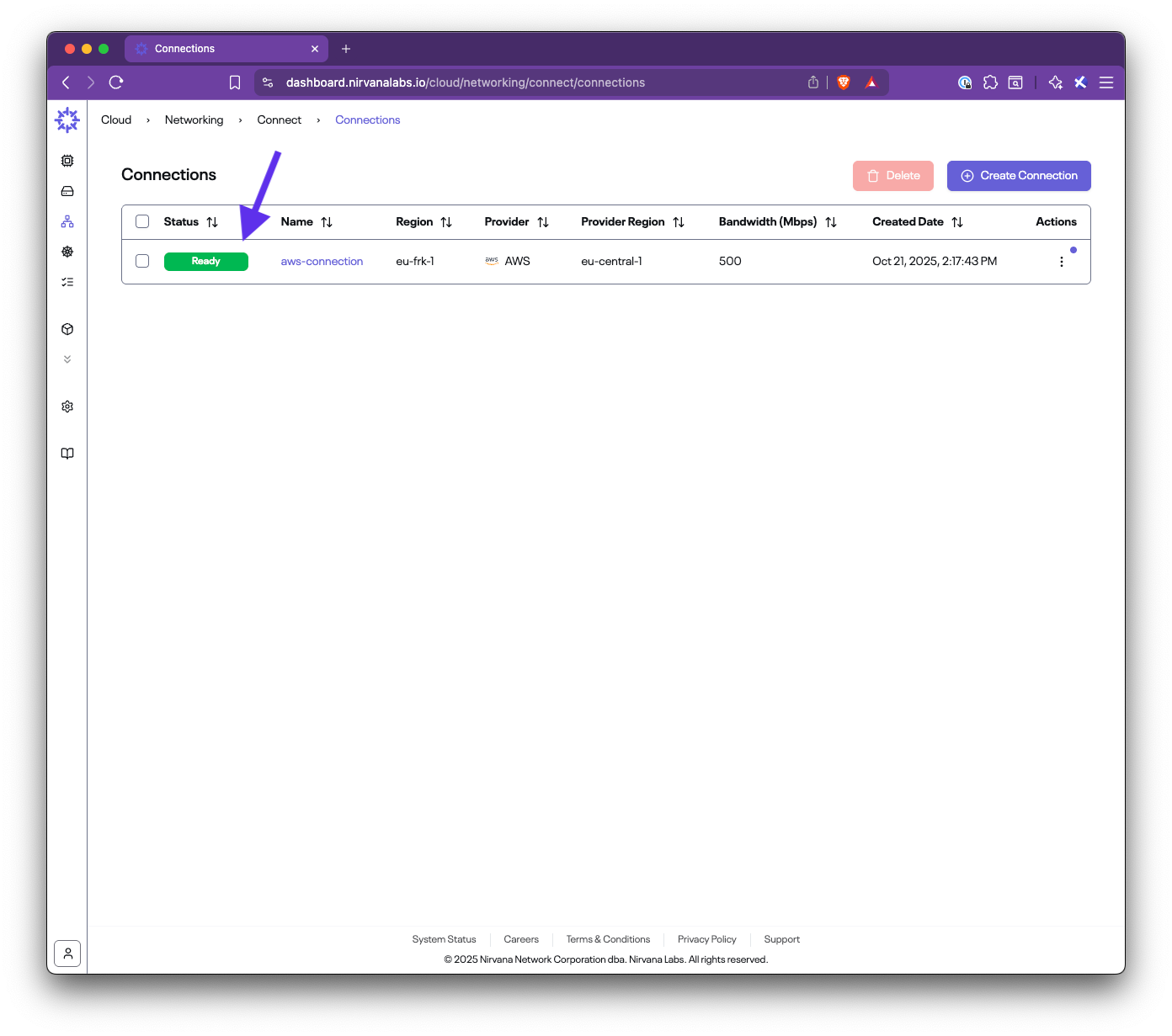

1. Navigate to **Networking → Connect → Connections**

2. If this is your first time, the table will be empty. Click **Create Connection**

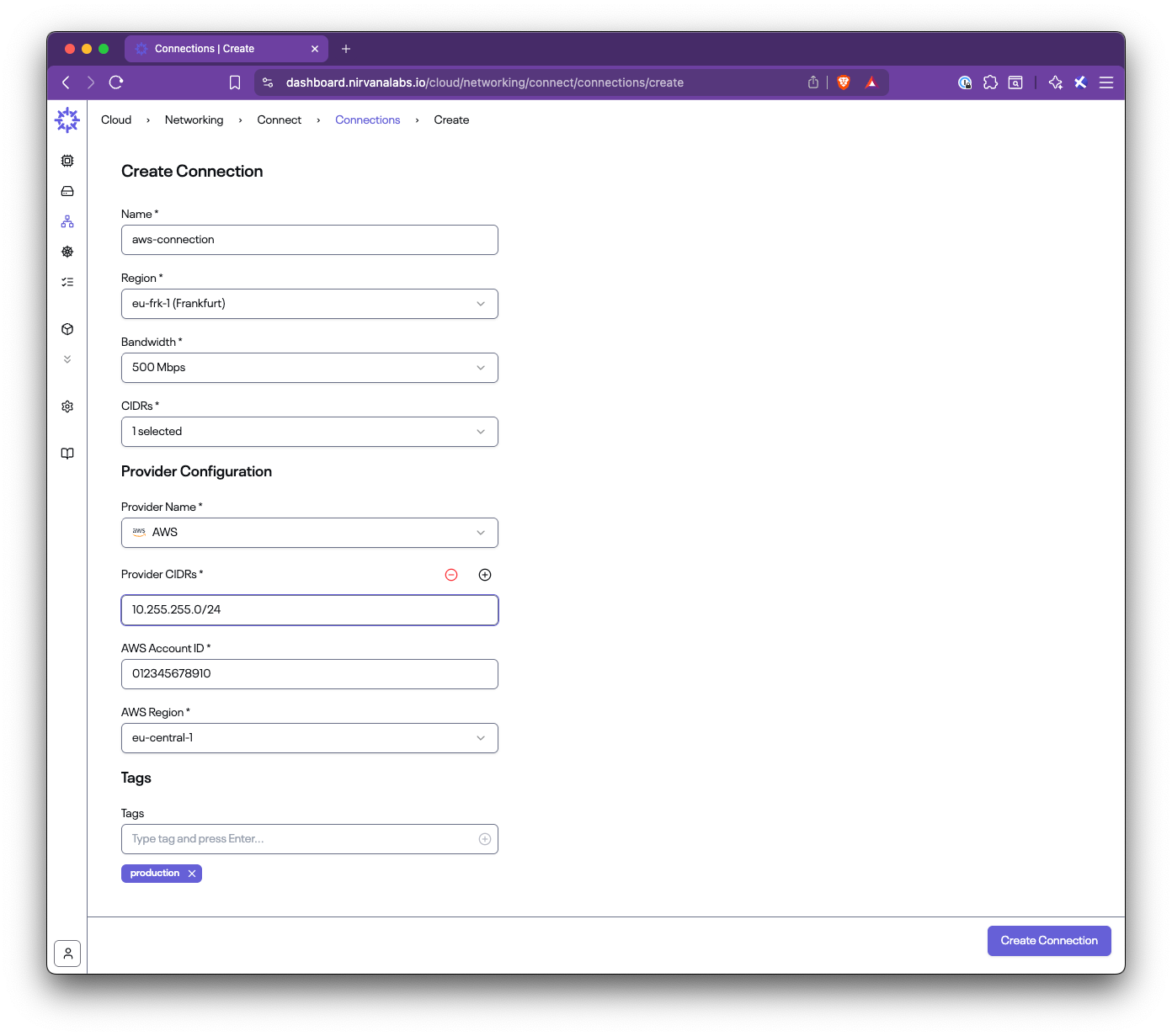

3. Fill out the form:

- **Name**: A descriptive connection name (e.g., `aws-connection`)

- **Region**: The Nirvana region where your VPC is located (e.g., `eu-frk-1`)

- **Bandwidth**: Choose the capacity (e.g., 50, 200, 500 Mbps)

- **CIDRs**: Select your Nirvana VPC CIDR

- **Provider Name**: AWS

- **Provider CIDRs**: Enter the AWS VPC CIDR or prefixes behind your TGW/VGW

- **AWS Account ID**: Your 12-digit AWS account ID

- **AWS Region**: The AWS region to connect to (e.g., `eu-central-1`)

- **Tags**: Optional metadata

4. Click **Create Connection**

:::tip

**Provider CIDRs** represent the AWS-side networks you want Nirvana to route to. Nirvana will program routes on its end using this field. Nirvana VPC CIDRs and Provider CIDRs must not overlap.

:::
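Before submitting the form, you can check the non-overlap requirement locally. The following is a minimal sketch using Python's standard `ipaddress` module; the function name and the example CIDRs are hypothetical, not values from your account.

```python
import ipaddress


def find_overlaps(nirvana_cidrs, provider_cidrs):
    """Return (nirvana, provider) CIDR pairs whose address ranges overlap."""
    overlaps = []
    for nirvana in nirvana_cidrs:
        nirvana_net = ipaddress.ip_network(nirvana)
        for provider in provider_cidrs:
            provider_net = ipaddress.ip_network(provider)
            if nirvana_net.overlaps(provider_net):
                overlaps.append((nirvana, provider))
    return overlaps


# Hypothetical values: a Nirvana VPC CIDR and two AWS-side prefixes.
conflicts = find_overlaps(["10.0.0.0/16"], ["172.31.0.0/16", "10.0.1.0/24"])
for nirvana, provider in conflicts:
    print(f"Overlap: {nirvana} (Nirvana) and {provider} (AWS)")
```

An empty result means the two sets of prefixes can be routed to each other without ambiguity.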

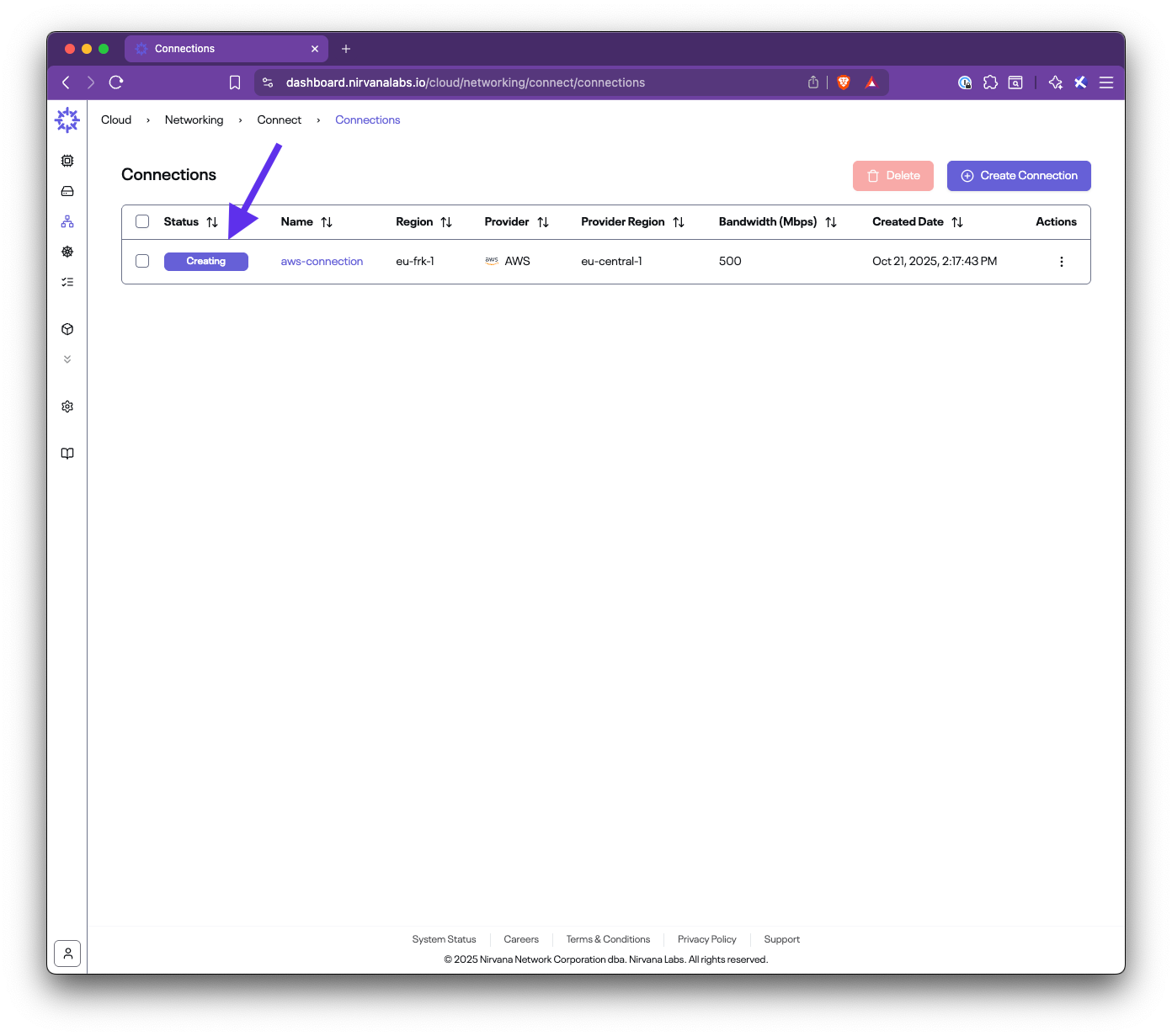

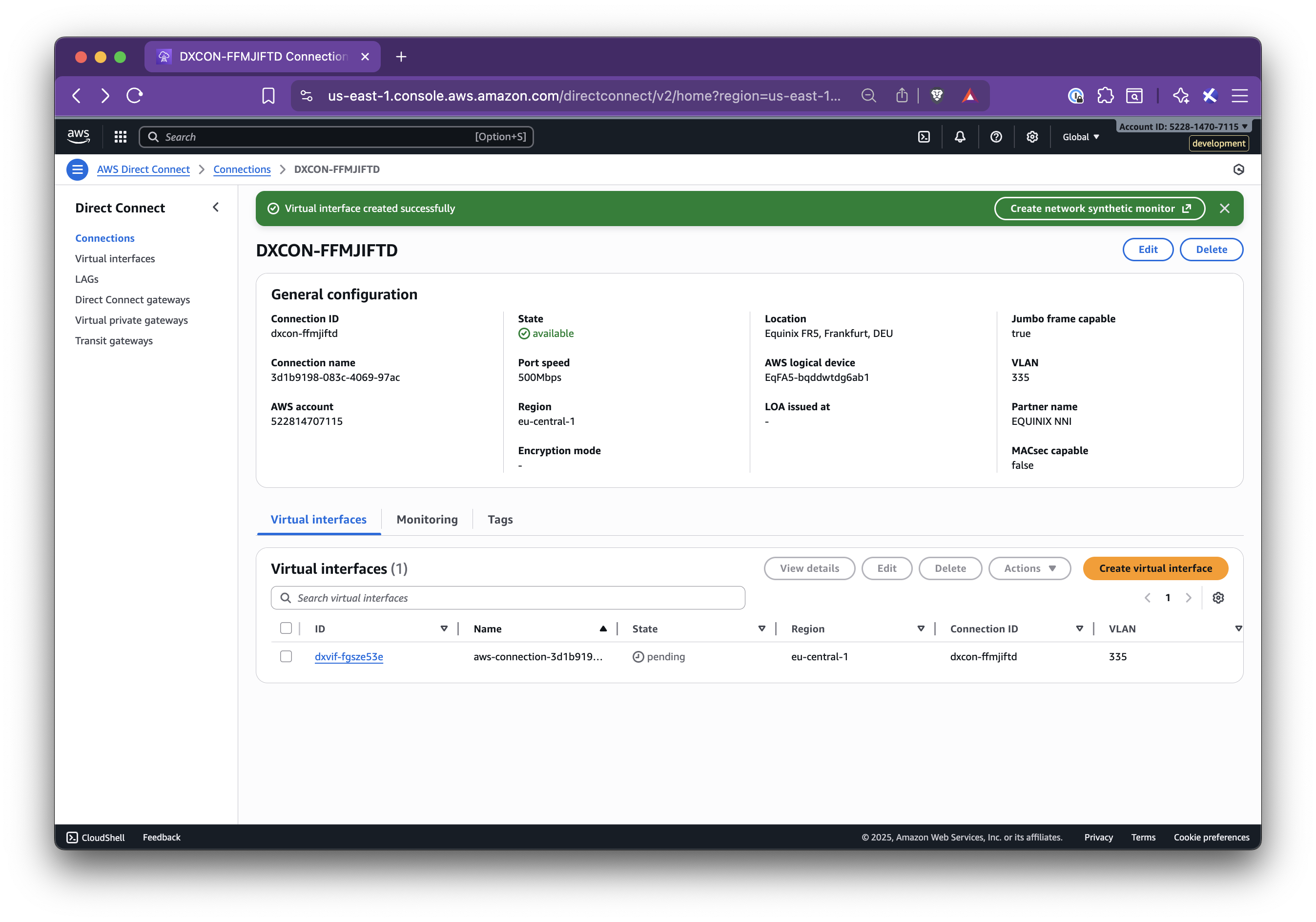

## Step 3: Check Connection Status and Parameters

1. After creating the connection, you will see it listed with **Status: Creating**

2. Once provisioning is complete, the status will change to **Ready**

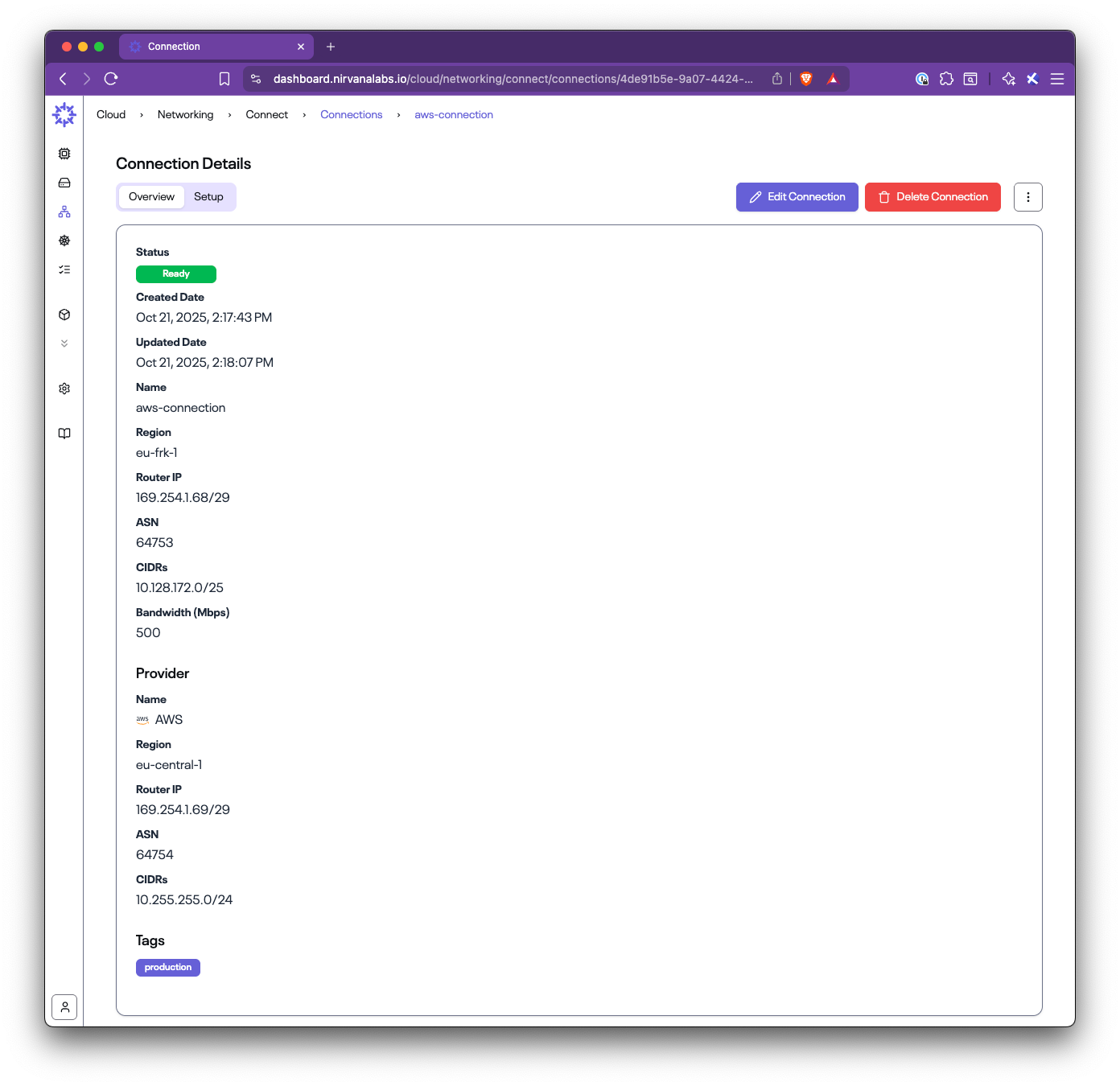

3. View connection details:

- Router IPs (Nirvana and AWS sides)

- ASNs for BGP configuration

- CIDRs

- Bandwidth (50 Mbps / 200 Mbps / 500 Mbps / 1 Gbps / 2 Gbps)

- Provider details

You will use these values when configuring AWS.

## Step 4: Run the Setup on AWS

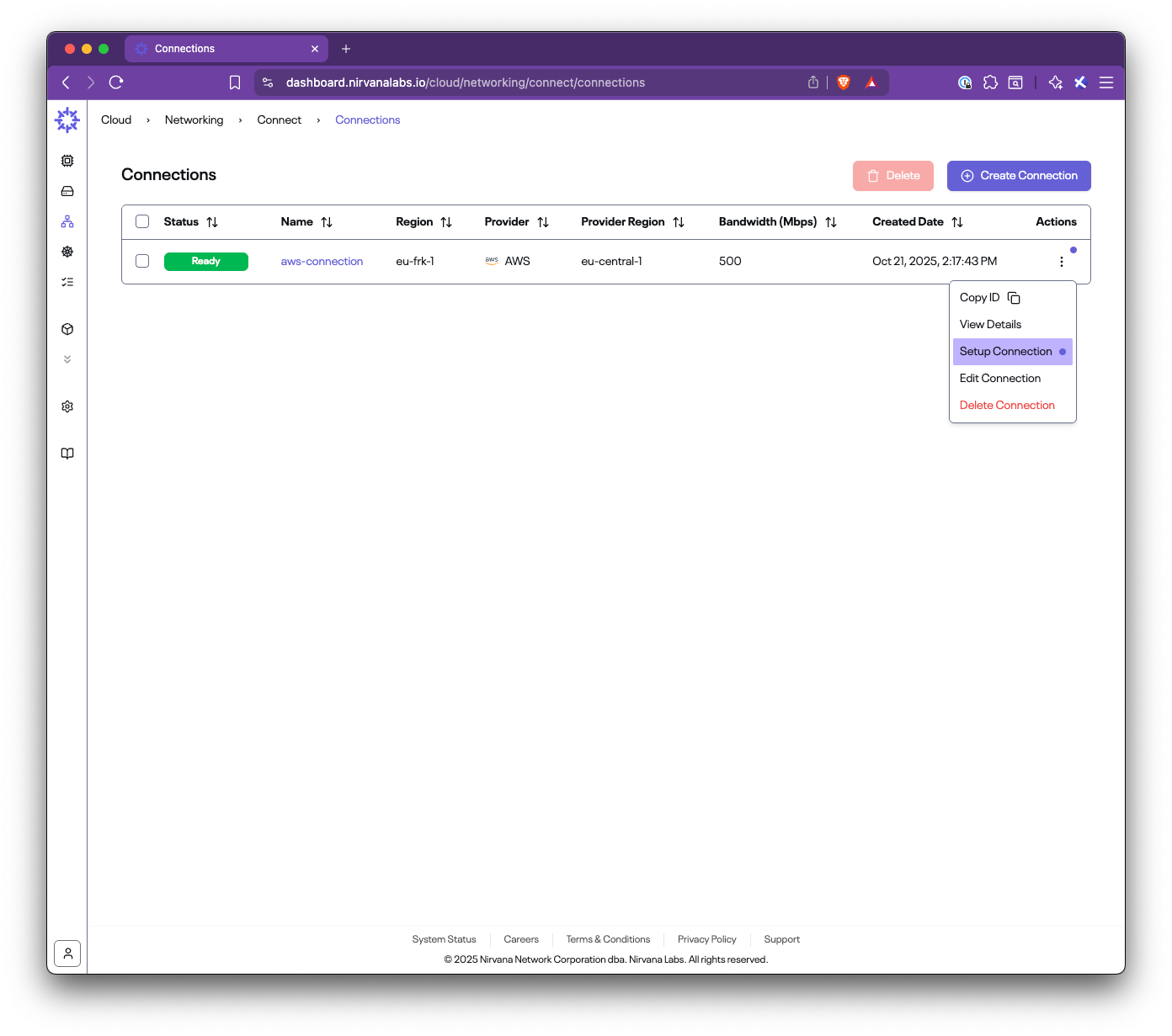

From the connection list, open the menu under **Actions** and select **Setup Connection** (or open the Setup tab in the details view).

Follow the guided steps:

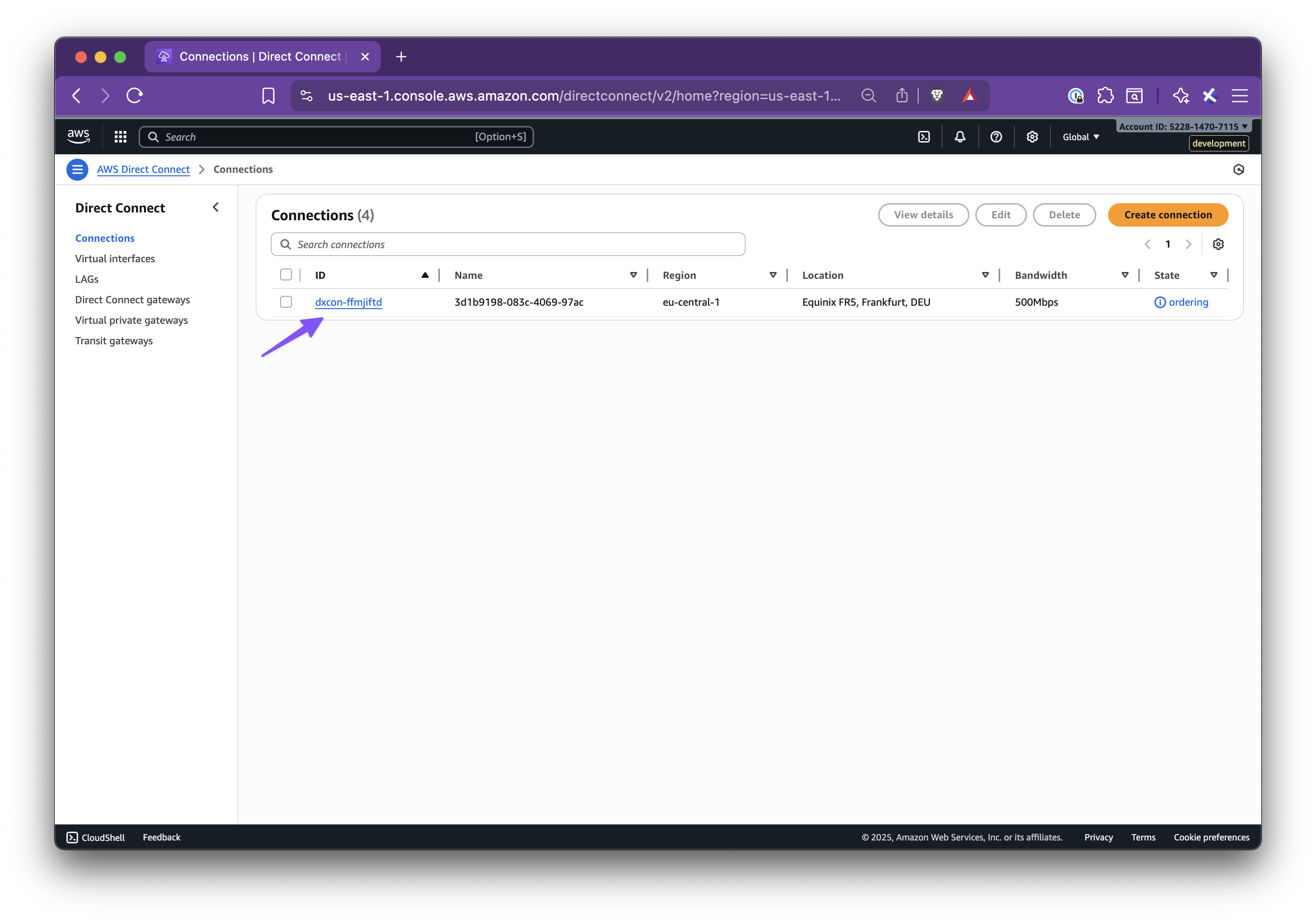

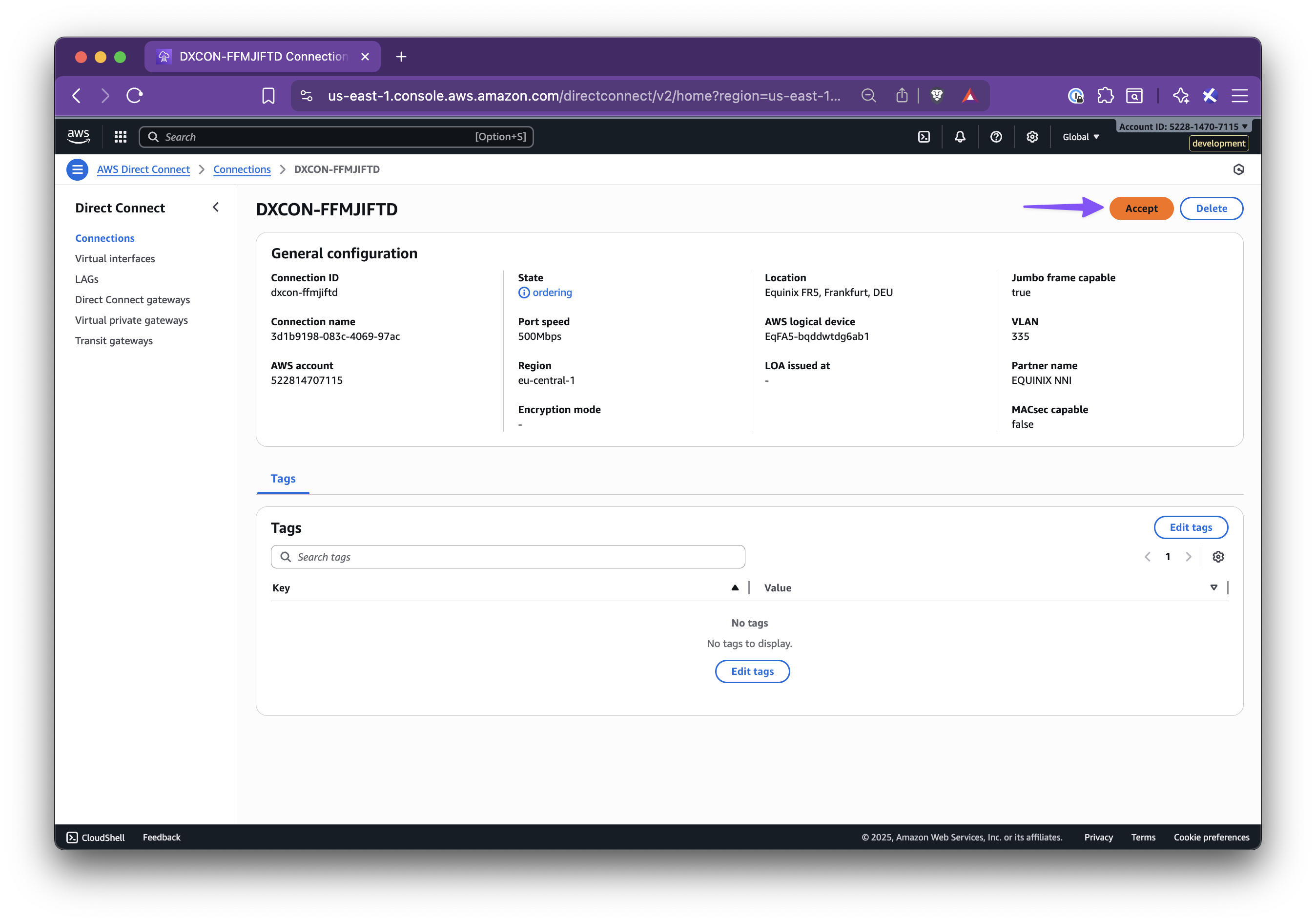

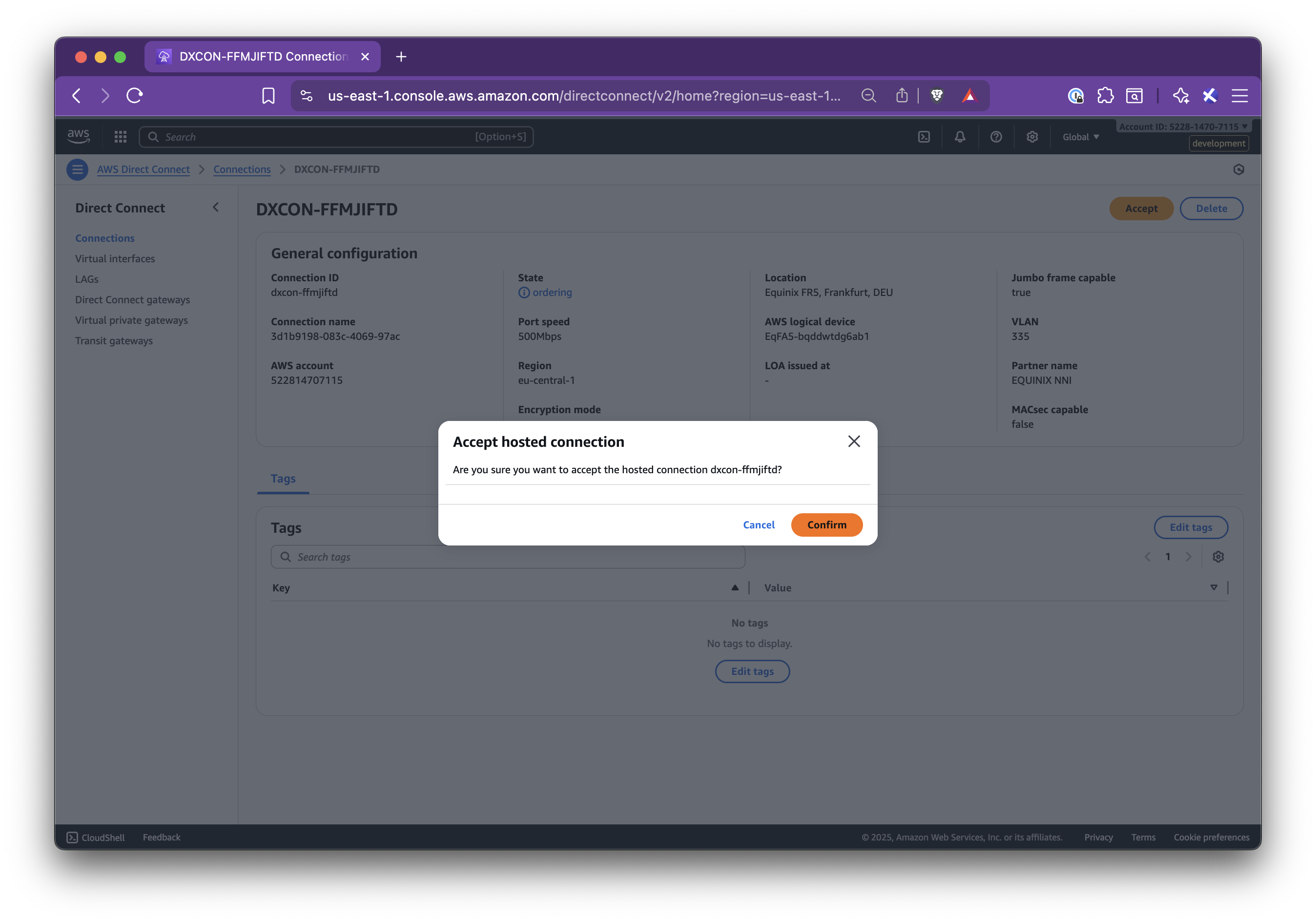

### Step 4.1: Accept the connection in AWS Direct Connect

1. Open the AWS Direct Connect console

2. Go to **Connections**

3. Find the pending connection with the name shown in the Setup panel

4. Accept and confirm
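If you prefer to script this step, the sketch below shows one possible approach with the AWS SDK for Python. It assumes the Nirvana connection appears in your account as a hosted connection awaiting acceptance (state `ordering`), and that `dx_client` is a boto3 Direct Connect client such as `boto3.client("directconnect", region_name="eu-central-1")`. The helper name is hypothetical.

```python
def accept_pending_connection(dx_client, connection_name):
    """Confirm the hosted connection with the given name if it is pending.

    dx_client is assumed to be a boto3 Direct Connect client.
    Returns the new connection state, or None if no pending match exists.
    """
    response = dx_client.describe_connections()
    for conn in response["connections"]:
        # Assumption: a hosted connection waiting for acceptance reports
        # the "ordering" state until its owner confirms or declines it.
        if (
            conn["connectionName"] == connection_name
            and conn["connectionState"] == "ordering"
        ):
            result = dx_client.confirm_connection(
                connectionId=conn["connectionId"]
            )
            return result["connectionState"]
    return None
```

Pass the connection name shown in the Setup panel as `connection_name`.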

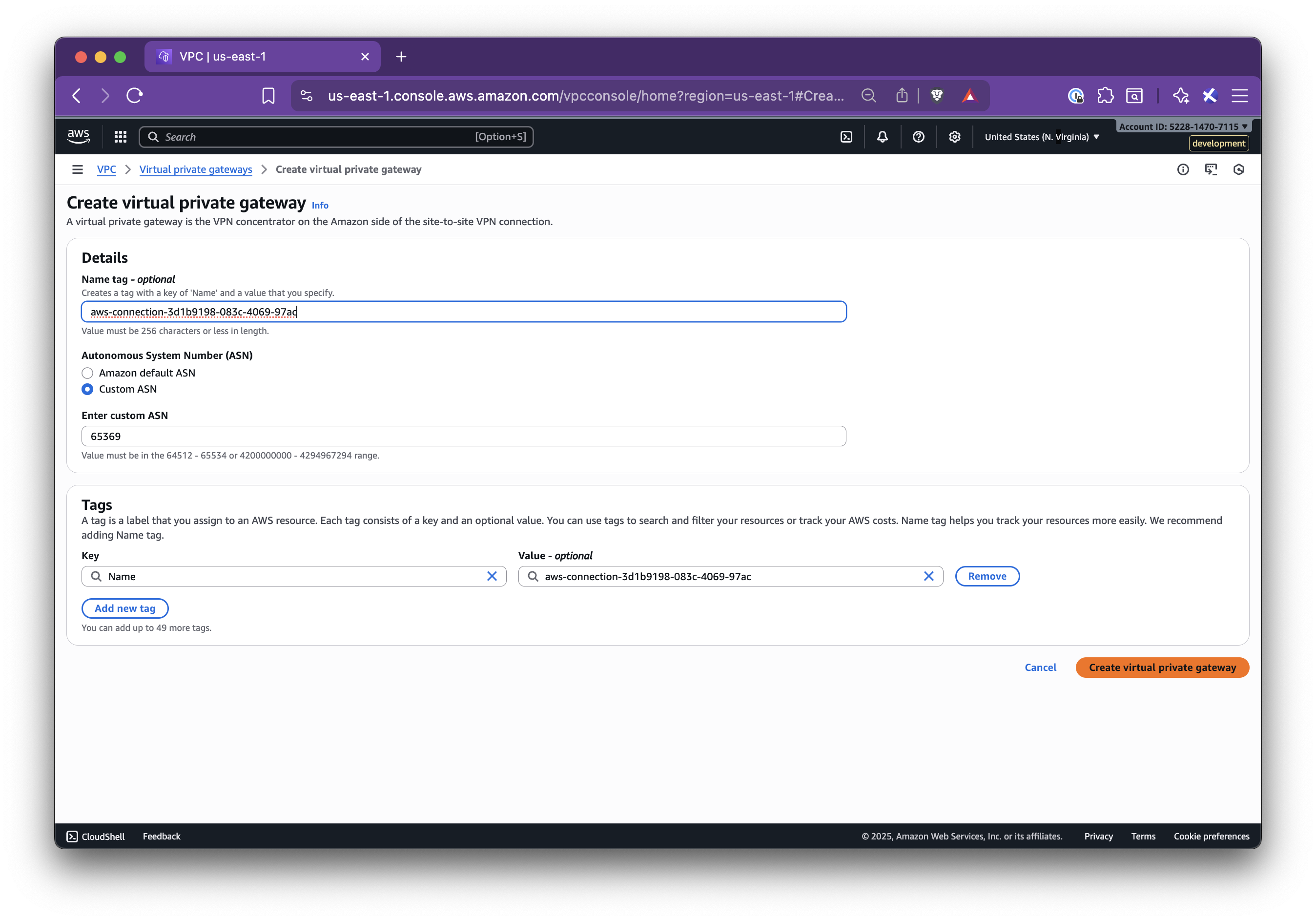

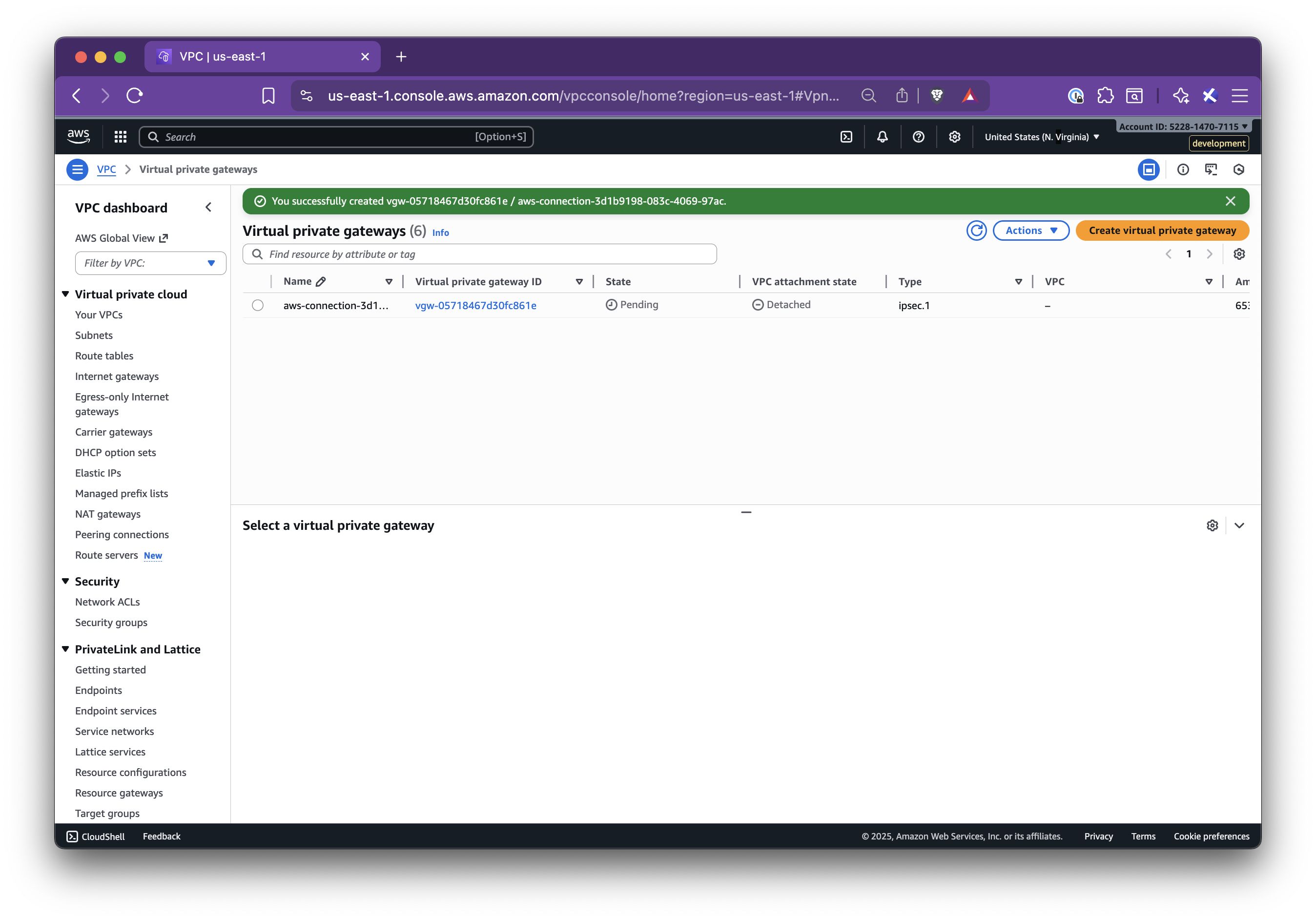

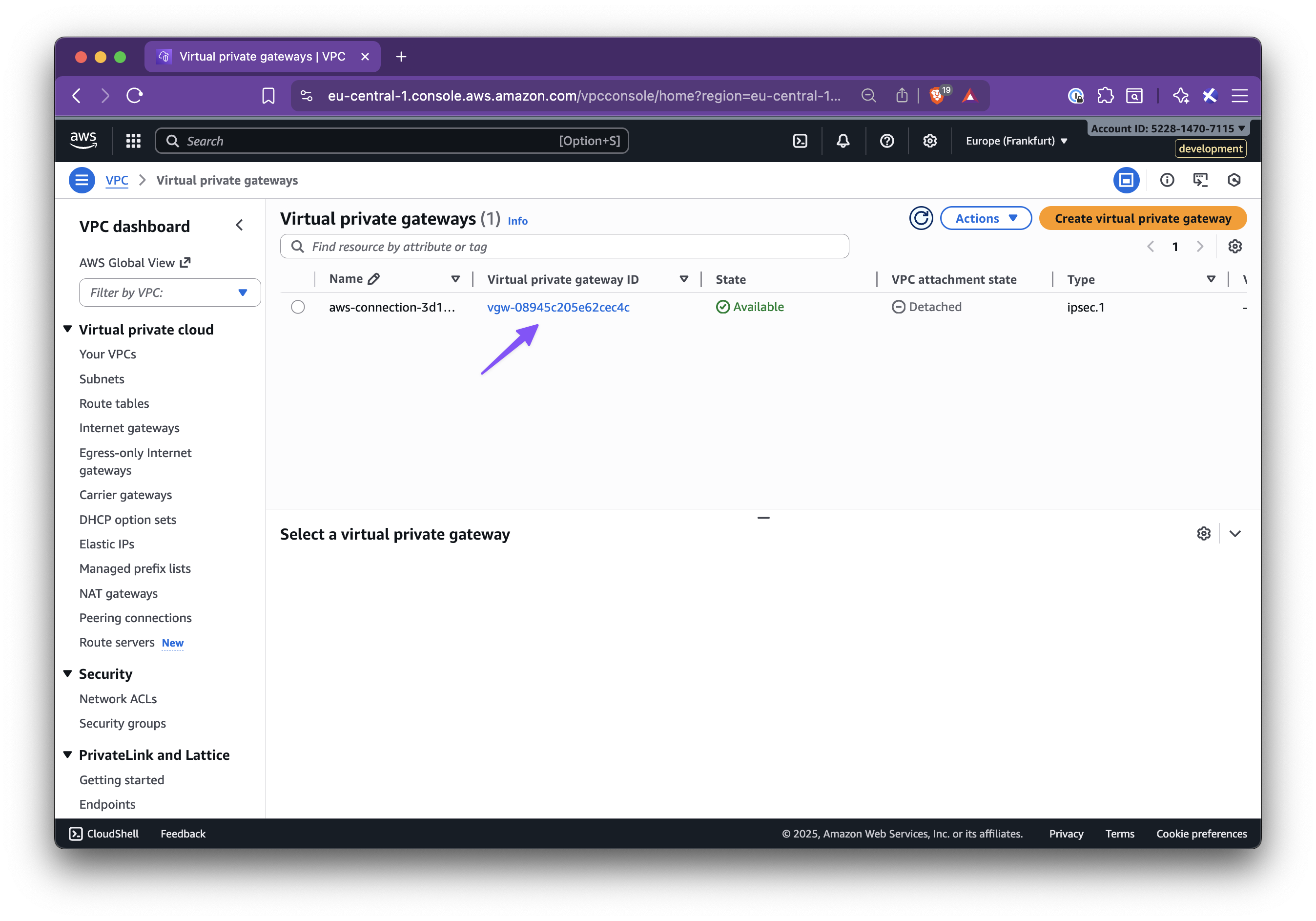

### Step 4.2: Create a Virtual Private Gateway (VGW)

1. Go to **AWS VPC → Virtual private gateways**

2. Create a new VGW with:

- **Name tag**: suggested name from the panel

- **ASN**: the AWS-side ASN shown in the panel

3. Click **Create virtual private gateway**

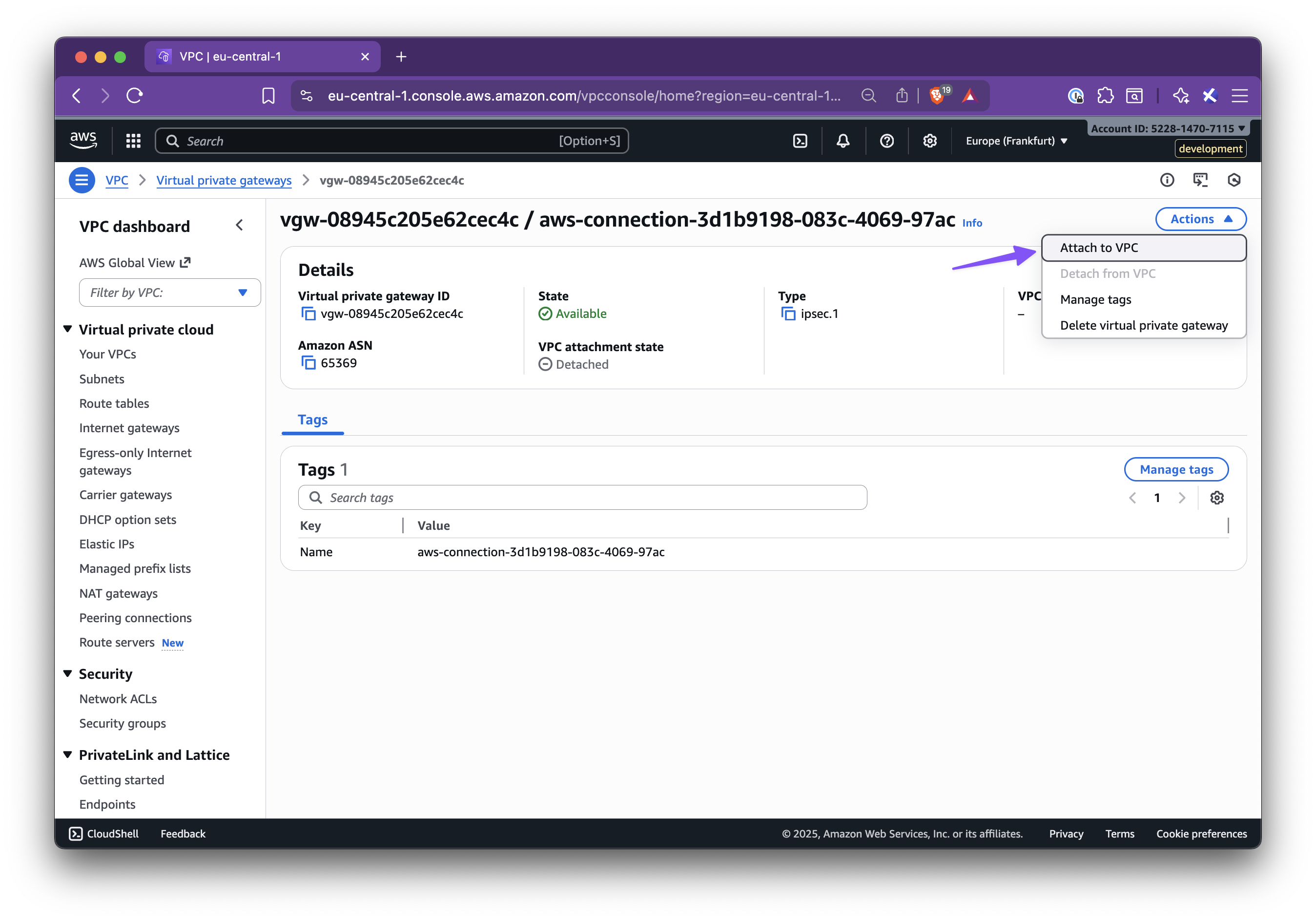

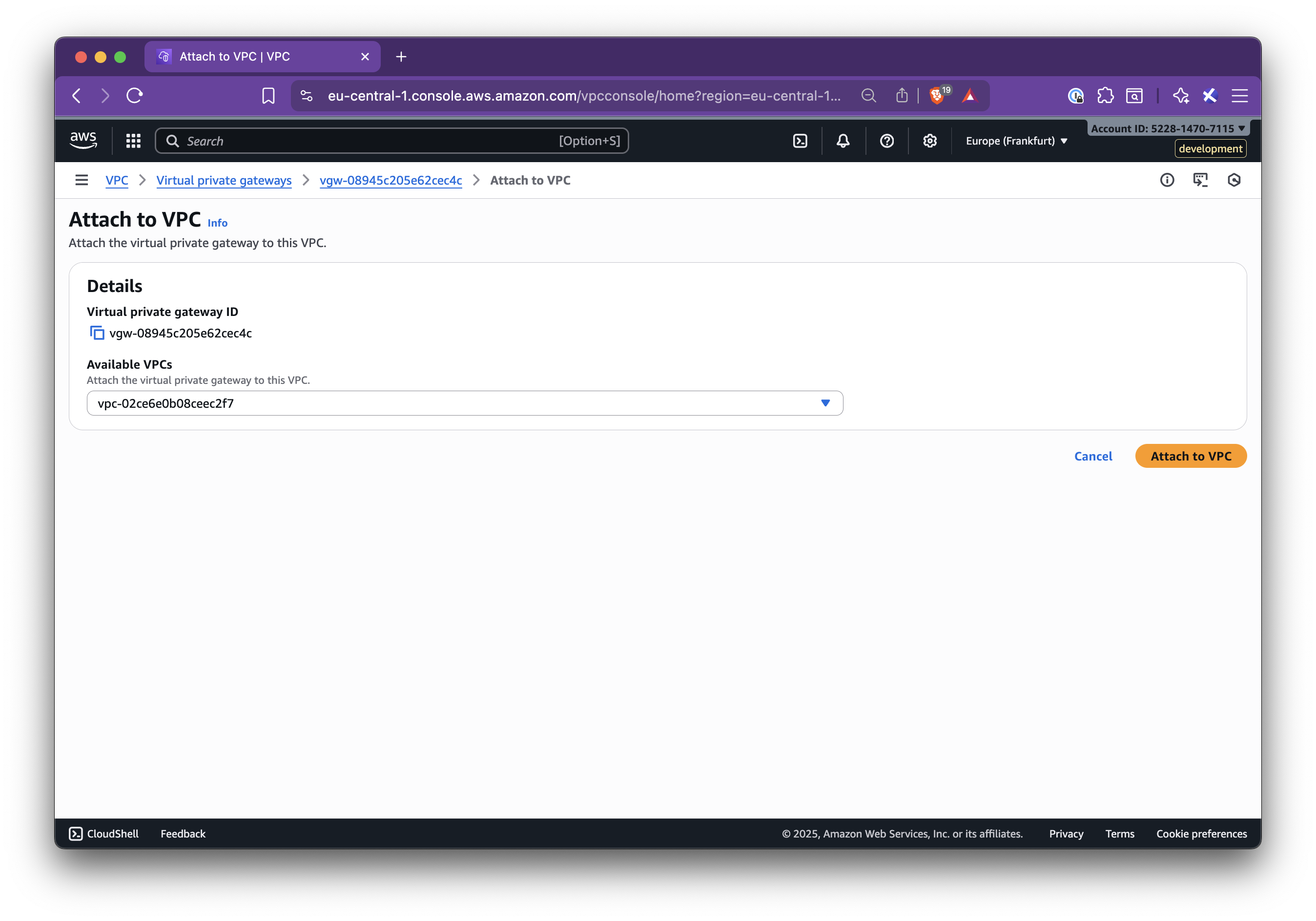

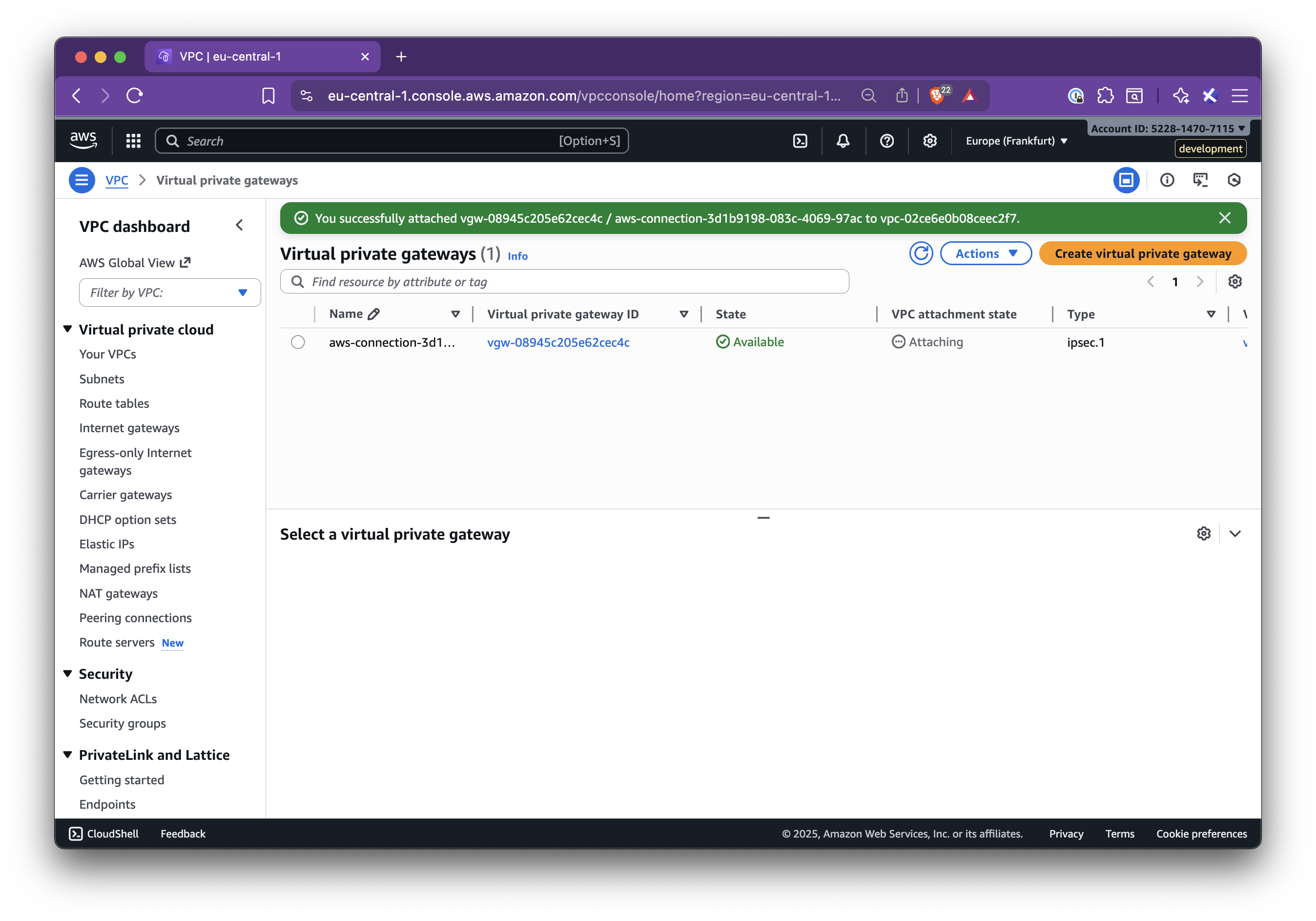

### Step 4.3: Attach the VGW to your VPC

1. In **Virtual private gateways**, select the new VGW

2. Click **Actions → Attach to VPC**

3. Select your target VPC and attach
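Steps 4.2 and 4.3 can also be scripted. The sketch below assumes `ec2_client` is a boto3 EC2 client (for example `boto3.client("ec2", region_name="eu-central-1")`); the helper name and its arguments are hypothetical, and the ASN and name tag should come from the Setup panel.

```python
def create_and_attach_vgw(ec2_client, vpc_id, amazon_side_asn, name_tag):
    """Create a virtual private gateway and attach it to the target VPC.

    ec2_client is assumed to be a boto3 EC2 client.
    Returns the ID of the new virtual private gateway.
    """
    response = ec2_client.create_vpn_gateway(
        Type="ipsec.1",
        AmazonSideAsn=amazon_side_asn,  # AWS-side ASN shown in the panel
        TagSpecifications=[
            {
                "ResourceType": "vpn-gateway",
                "Tags": [{"Key": "Name", "Value": name_tag}],
            }
        ],
    )
    vgw_id = response["VpnGateway"]["VpnGatewayId"]
    ec2_client.attach_vpn_gateway(VpcId=vpc_id, VpnGatewayId=vgw_id)
    return vgw_id
```

The attachment takes a short while to complete on the AWS side, so check the gateway state in the console before moving on.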

### Step 4.4: Add the Nirvana VPC CIDR to the AWS route table

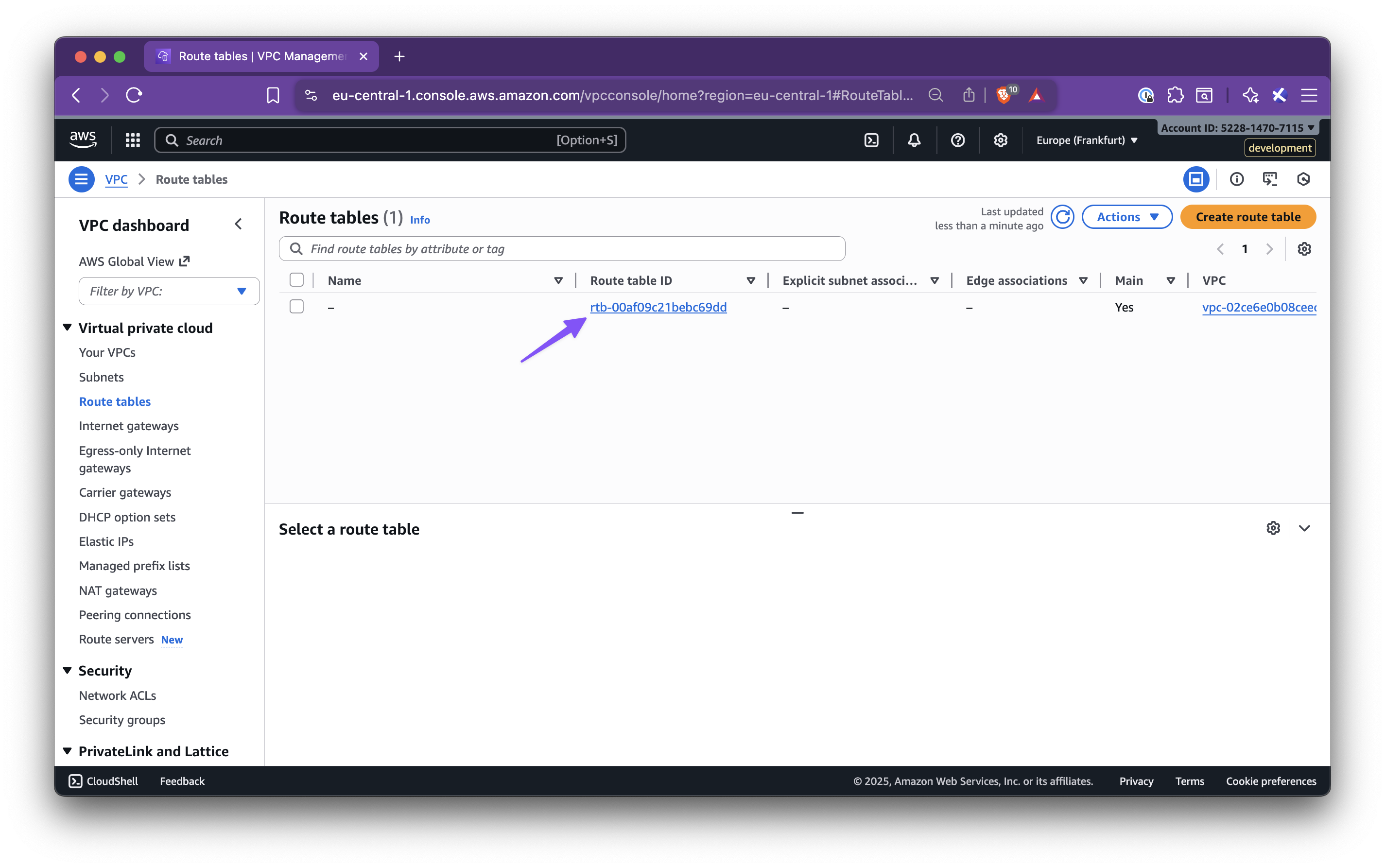

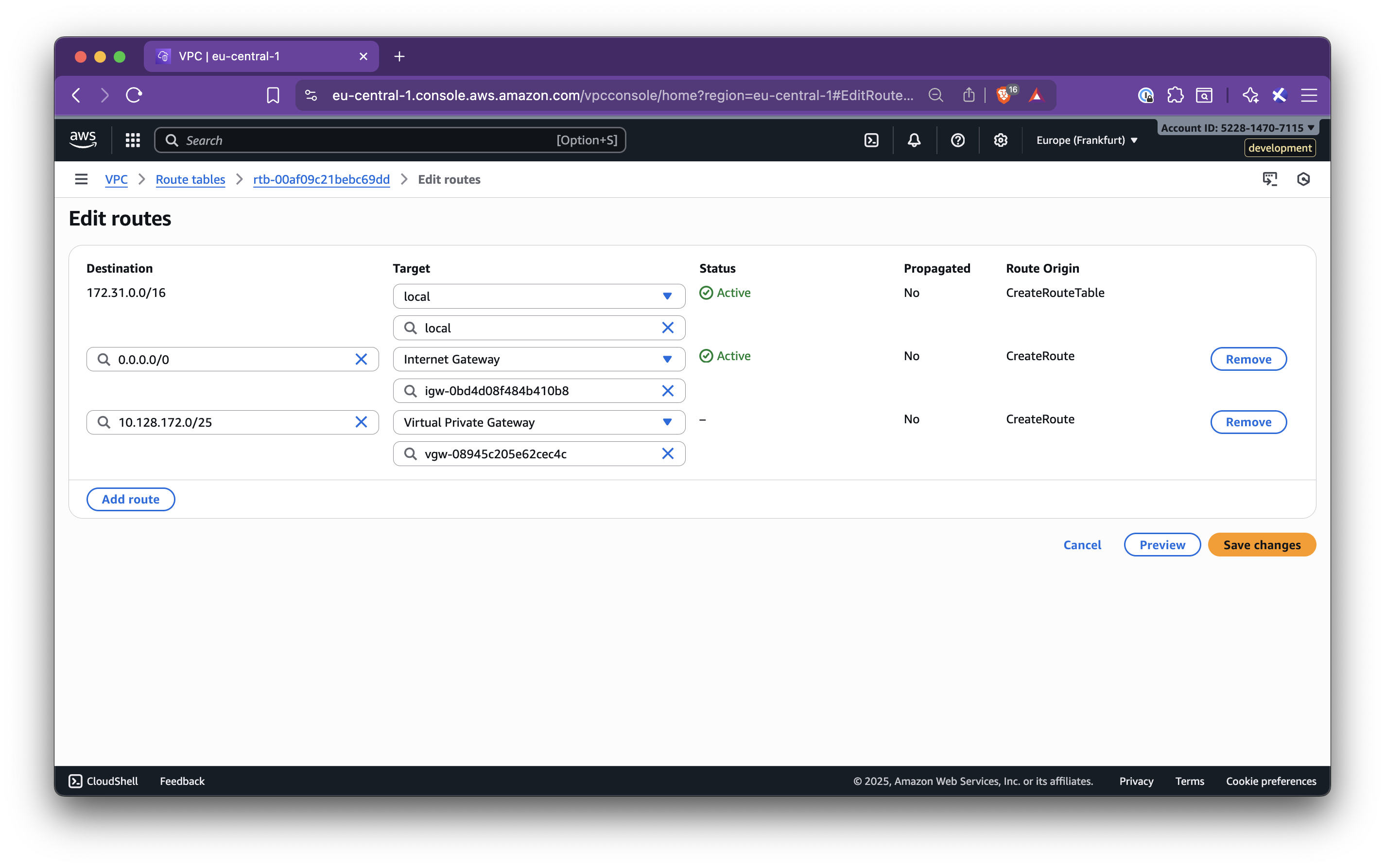

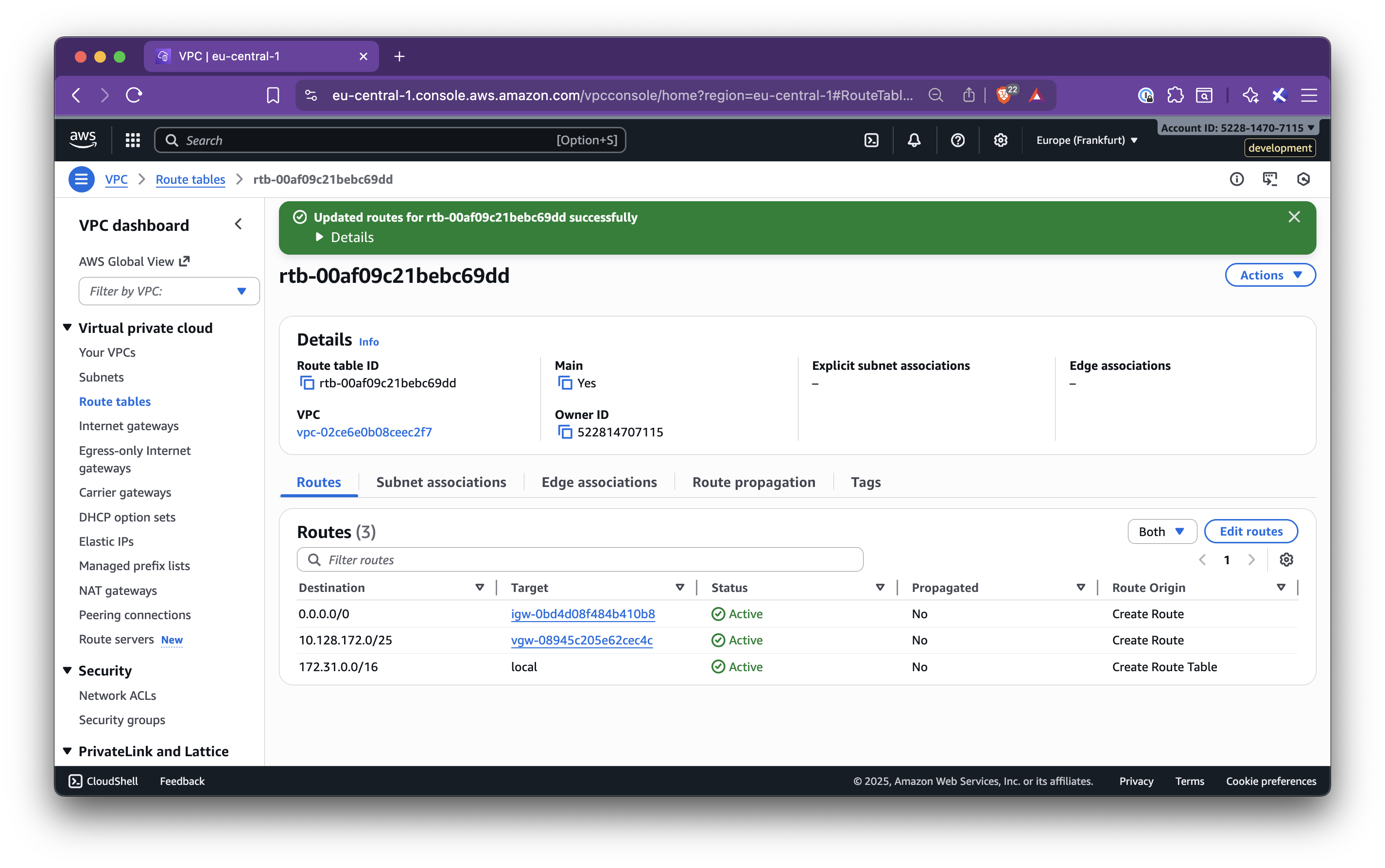

1. Go to **AWS VPC → Route tables**

2. Select the route table for the target VPC

3. Click **Edit routes → Add route**:

- **Destination**: Nirvana VPC CIDR

- **Target**: the VGW from Step 4.2

4. Save changes
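The same route can be added programmatically. This is a minimal sketch that assumes `ec2_client` is a boto3 EC2 client; the helper name and the identifiers passed to it are hypothetical.

```python
import ipaddress


def add_nirvana_route(ec2_client, route_table_id, nirvana_vpc_cidr, vgw_id):
    """Route traffic for the Nirvana VPC CIDR to the virtual private gateway.

    ec2_client is assumed to be a boto3 EC2 client.
    """
    # Fail early with ValueError if the CIDR is malformed.
    ipaddress.ip_network(nirvana_vpc_cidr)
    return ec2_client.create_route(
        RouteTableId=route_table_id,
        DestinationCidrBlock=nirvana_vpc_cidr,
        GatewayId=vgw_id,  # the VGW created in Step 4.2
    )
```

Validating the CIDR locally first avoids sending an obviously malformed request to AWS.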

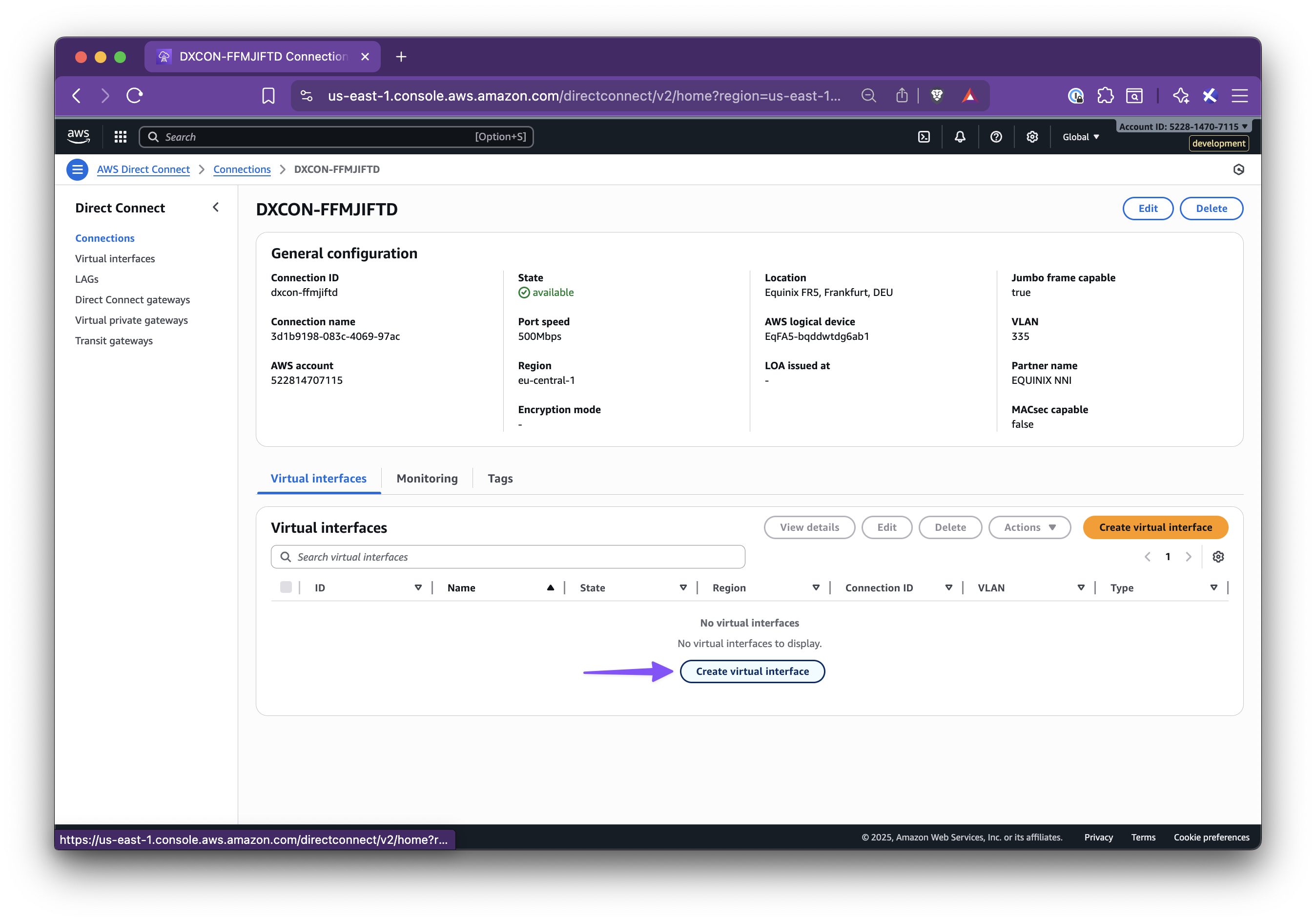

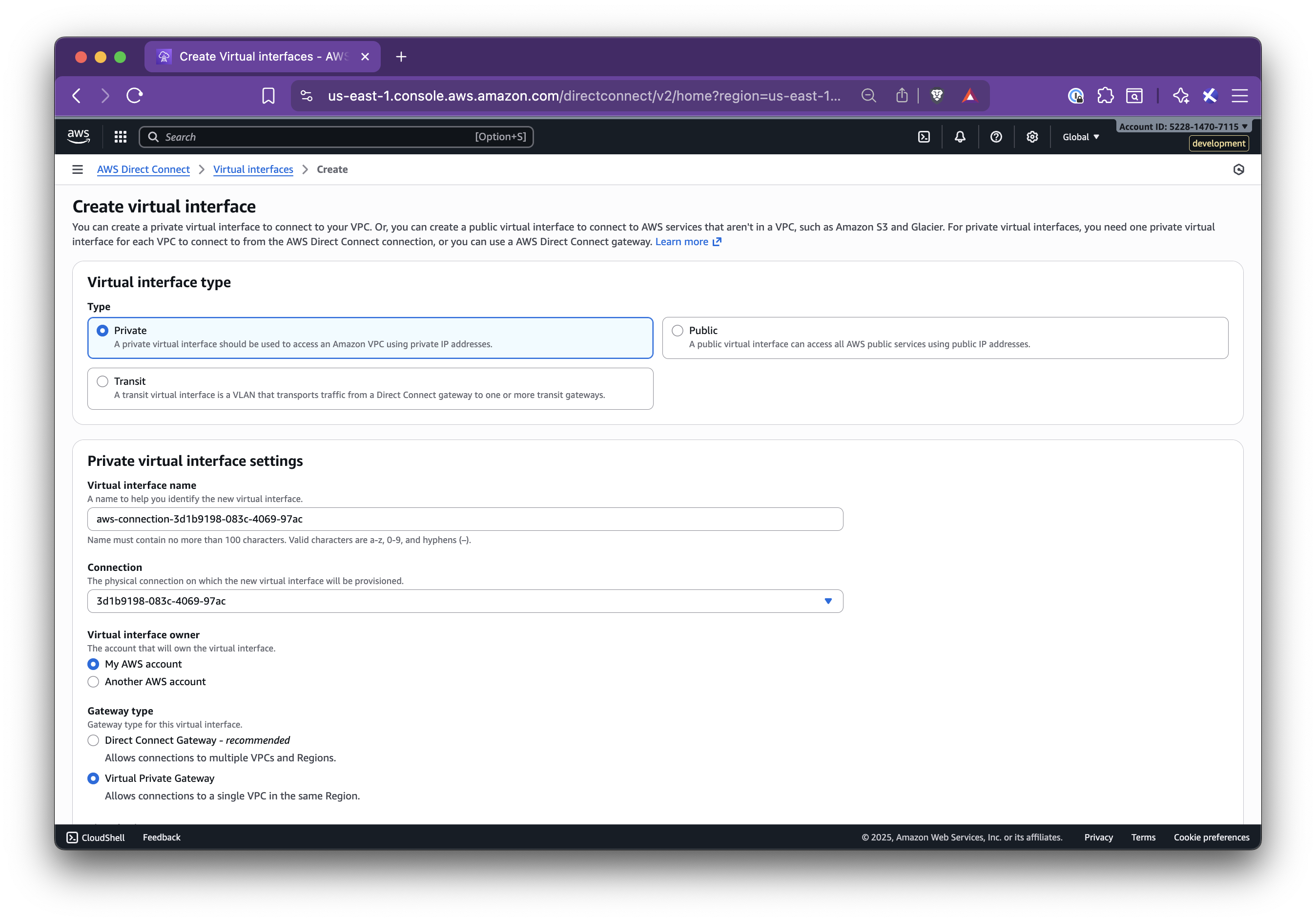

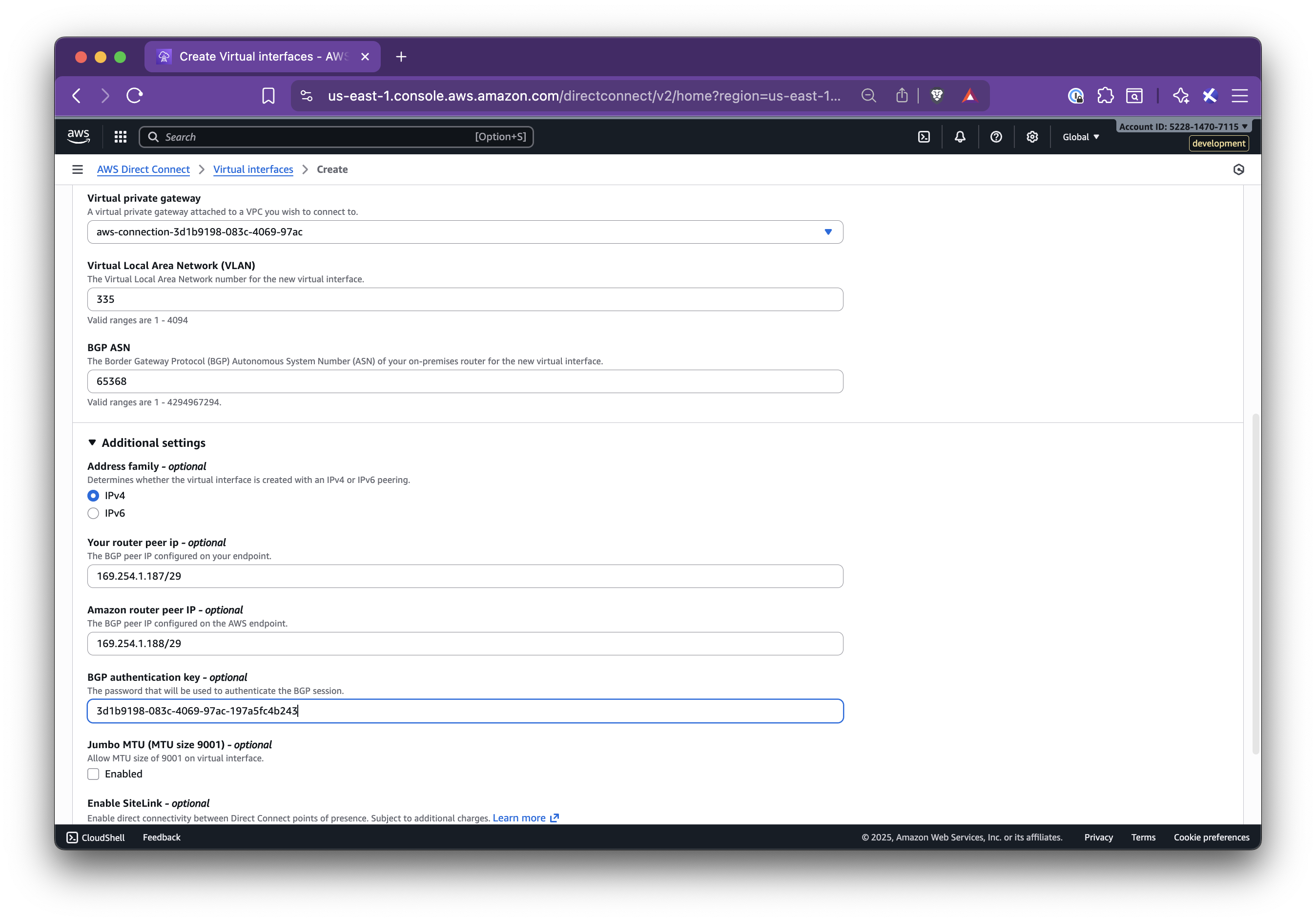

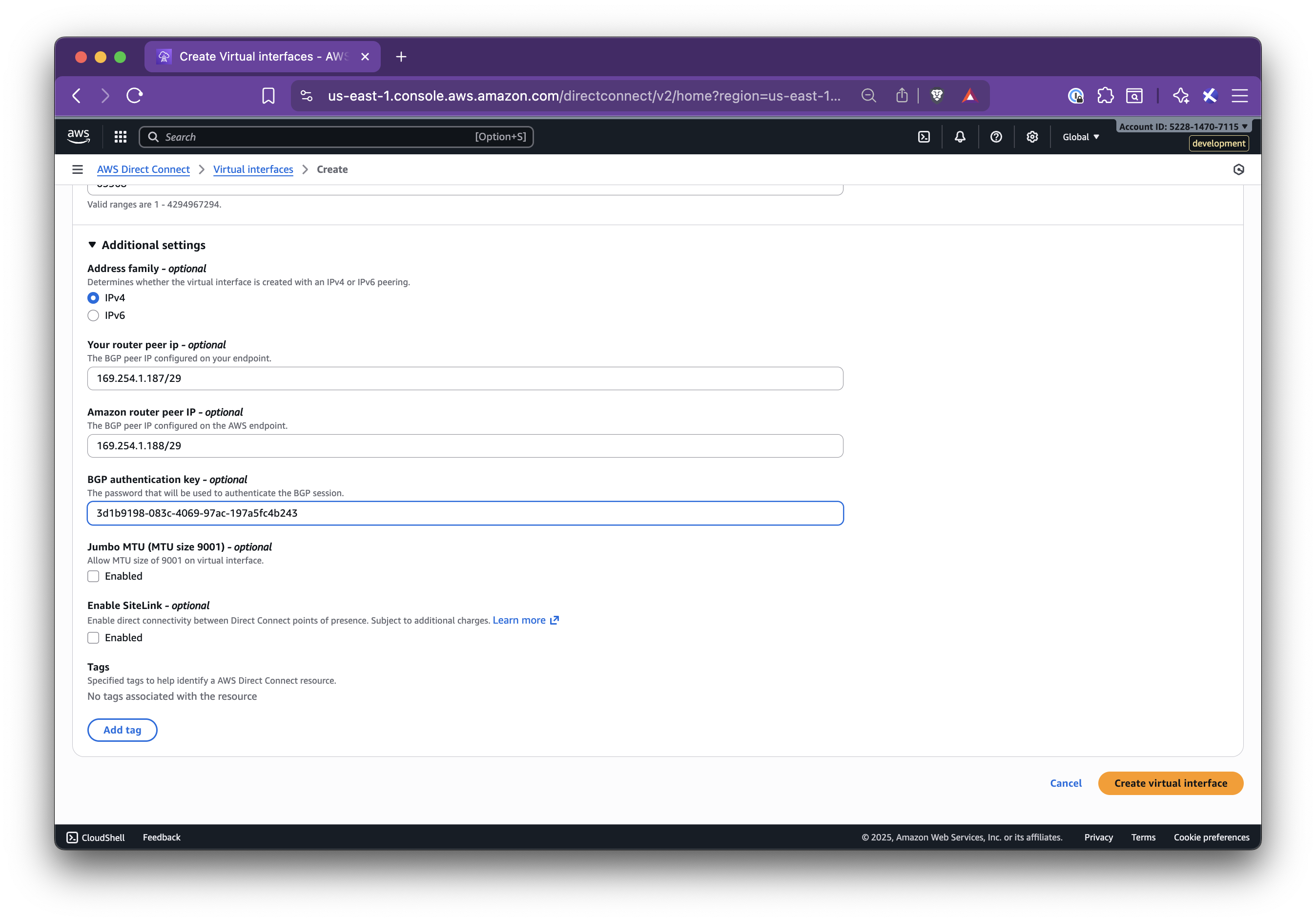

### Step 4.5: Create a Private Virtual Interface (VIF)

1. Go to **AWS Direct Connect → Connections**

2. Select the connection and click **Create virtual interface**

3. Configure the interface:

- **Type**: Private

- **Gateway type**: Virtual Private Gateway

- **Virtual interface name**: suggested from the panel

- **Virtual interface owner**: My AWS Account

- **Virtual Private Gateway**: the VGW attached

- **BGP ASN**: from the panel

- **Peer IPs and BGP Auth Key**: exactly as shown in the panel

4. Click **Create interface**

When the VIF is up, BGP will be established and the private link will be active.

## Step 5: Validate Connectivity

1. From an EC2 instance in the AWS VPC, test connectivity to resources in your Nirvana VPC CIDR (for example, by pinging or curling a private service)

2. From a Nirvana VM or Pod, test connectivity back to AWS resources
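Where ICMP is blocked, a TCP connection test is a useful alternative to ping. The sketch below uses only the Python standard library; the function name is hypothetical, and you would supply the private IP and port of a service you know is listening on the other side.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable networks.
        return False
```

Run it from both sides, for example `can_connect("10.0.0.10", 22)` from the EC2 instance, where `10.0.0.10` stands in for a private address in your Nirvana VPC.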

If connectivity fails, check:

- Route table entries (Nirvana CIDR to VGW)

- VIF status (BGP should be up)

- Security groups and NACLs on both sides

- Router IPs, ASNs, and BGP keys

Once traffic flows in both directions, your private connection between Nirvana and AWS is fully established! For additional support or troubleshooting, you can [reach out to our support team](https://nirvanalabs.io/contact).

:::note

You only need to add the Nirvana VPC CIDR route on the AWS side. The reverse route on Nirvana is handled automatically using the Provider CIDRs entered during connection creation.

:::

## Troubleshooting

- **VIF Down**: Check for incorrect BGP keys, mismatched ASNs, or reversed IP addresses

- **No Connectivity**: Confirm route table entries, security group rules, and that CIDRs are not overlapping

- **Stuck in Creating**: Open connection details and verify all values. Check VIF state in AWS

- **Edit**: Use the menu to edit Provider CIDRs or reopen the Setup steps if needed

---

title: IP Ranges

description: Nirvana Cloud’s IP Ranges

source_url:

html: https://docs.nirvanalabs.io/cloud/networking/ips/

md: https://docs.nirvanalabs.io/cloud/networking/ips/index.md

---

**Last Updated:** January 7, 2025

This page is the definitive source of Nirvana's current IP ranges.

From time to time, we will update this page to reflect the latest IP ranges.

## IPv4

Also available as an [IPv4 text list](/docs/cloud/networking/ips-v4.txt).

### us-sea-1

* 86.109.12.0/26

* 86.109.12.64/27

### us-sva-1

* 86.109.11.0/27

* 139.178.90.96/27

* 147.28.180.0/26

### us-sva-2

* 170.23.67.0/24

### us-chi-1

* 86.109.2.64/26

### us-wdc-1

* 147.28.163.0/26

* 147.28.228.224/27

### eu-frk-1

* 147.28.184.224/28

* 147.28.185.128/26

### ap-sin-1

* 147.28.178.128/26

### ap-tyo-1

* 136.144.52.128/26
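For allowlisting or log analysis, the ranges above can be checked programmatically. The sketch below hard-codes the ranges as listed on this page at the time of writing, so it will go stale when the page changes; the function name is hypothetical.

```python
import ipaddress

# Ranges copied from the list above; update them whenever this page changes.
NIRVANA_IPV4_RANGES = {
    "us-sea-1": ["86.109.12.0/26", "86.109.12.64/27"],
    "us-sva-1": ["86.109.11.0/27", "139.178.90.96/27", "147.28.180.0/26"],
    "us-sva-2": ["170.23.67.0/24"],
    "us-chi-1": ["86.109.2.64/26"],
    "us-wdc-1": ["147.28.163.0/26", "147.28.228.224/27"],
    "eu-frk-1": ["147.28.184.224/28", "147.28.185.128/26"],
    "ap-sin-1": ["147.28.178.128/26"],
    "ap-tyo-1": ["136.144.52.128/26"],
}


def region_for_ip(address: str):
    """Return the Nirvana region whose range contains address, else None."""
    ip = ipaddress.ip_address(address)
    for region, cidrs in NIRVANA_IPV4_RANGES.items():
        if any(ip in ipaddress.ip_network(cidr) for cidr in cidrs):
            return region
    return None
```

A return value of `None` means the address is not in any range listed here.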

---

title: Site-to-Site Mesh

source_url:

html: https://docs.nirvanalabs.io/cloud/networking/site-to-site-mesh/

md: https://docs.nirvanalabs.io/cloud/networking/site-to-site-mesh/index.md

---

Nirvana Cloud's Site-to-Site Mesh is a solution designed to establish secure, high-performance network connections across multiple locations. It uses WireGuard to create a mesh network that allows direct communication between sites.

The Site-to-Site Mesh system offers a seamless networking experience, enabling resources across multiple sites to communicate as though they are in a single local network. This is particularly beneficial when managing geographically dispersed server locations or data centers as it allows for easy synchronization and sharing of data across these points.

In addition to securely and efficiently connecting multiple sites, the Site-to-Site Mesh offers the following advantages:

* **Performance**: The implementation of WireGuard offers high-speed secure connections, making the Site-to-Site Mesh suitable for substantial data transfers between various locations.

* **High Security**: Traffic between locations is encrypted by WireGuard, protecting data in transit across the mesh.

* **Enhanced Reliability**: The use of WireGuard ensures that the Site-to-Site Mesh offers exceptional stability, maintaining reliable and available connections essential for modern, cloud-based operations.

Nirvana Cloud’s Site-to-Site Mesh solution provides a convenient way for businesses to meet multi-site networking requirements. This feature can facilitate reliable, secure, and high-performing communication channels across networks of diverse sizes and distribution.
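To illustrate what direct communication between sites implies, the sketch below enumerates the site pairs in a full mesh, where every site has a tunnel to every other site. Whether Nirvana Cloud builds a full mesh internally is an assumption here, and the function name and site list are illustrative.

```python
from itertools import combinations


def mesh_tunnels(sites):
    """Return every unordered pair of sites, one tunnel per pair."""
    return list(combinations(sites, 2))


# Hypothetical deployment spanning three regions.
sites = ["us-sea-1", "eu-frk-1", "ap-sin-1"]
for a, b in mesh_tunnels(sites):
    print(f"{a} <-> {b}")
```

A full mesh of n sites needs n(n-1)/2 tunnels, so the number of direct paths grows quickly as sites are added.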

---

title: Overview

source_url:

html: https://docs.nirvanalabs.io/cloud/networking/vpcs/

md: https://docs.nirvanalabs.io/cloud/networking/vpcs/index.md

---

A Virtual Private Cloud (VPC) is a virtual network that closely resembles a conventional network you would operate in a physical data center.

### Subnets

A subnet is a range of IP addresses in your VPC. Subnets are designed to be user-friendly and region-centric, and the single-subnet-per-VPC model simplifies VPC management and adoption.

### Public IP Addresses

Instances that need to connect to the internet or be reachable from it need a public IP address. Users can allocate a static public IP address to their instances. Unlike a dynamic IP address, a static public IP address does not change when an instance is stopped and started. This ensures stable, dependable access to the applications or services running on that instance.

### Firewall Rules

In the architecture of a VPC, firewall rules are pivotal for maintaining data security. These rules offer stateless filtering controls that govern whether network traffic should be allowed or denied. These permissions or denials are based on criteria like the IP protocol, source IP, and designated port numbers.
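The matching logic described above can be modelled in a few lines. This is an illustrative sketch only, not Nirvana Cloud's implementation: the `FirewallRule` fields, the allow-list semantics, and the default-deny behaviour are all assumptions made for the example.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class FirewallRule:
    """A hypothetical allow rule matched on protocol, source, and port."""
    protocol: str      # e.g. "tcp" or "udp"
    source_cidr: str   # e.g. "203.0.113.0/24"
    port_start: int
    port_end: int


def is_allowed(rules, protocol, source_ip, port):
    """Return True if any rule matches the packet; otherwise deny."""
    ip = ipaddress.ip_address(source_ip)
    for rule in rules:
        if (
            rule.protocol == protocol
            and ip in ipaddress.ip_network(rule.source_cidr)
            and rule.port_start <= port <= rule.port_end
        ):
            return True
    return False  # assumed default: traffic matching no rule is denied


# Example: allow SSH from one office range and HTTPS from anywhere.
rules = [
    FirewallRule("tcp", "203.0.113.0/24", 22, 22),
    FirewallRule("tcp", "0.0.0.0/0", 443, 443),
]
```

Each packet is evaluated on its own against the rule list, which is the sense in which this kind of filtering is stateless.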

Firewall rules filter the traffic that enters or exits the VPC, strengthening protection against unauthorized access and potential data breaches. They also help enforce organizational policies and regulatory requirements, increasing the reliability and trustworthiness of the virtual environment.

Note that the network within a VPC is designed so that all virtual machines in it can communicate with each other automatically. For connections to VMs outside the VPC or to the public internet, the specific destination IPs and required ports must be defined manually in the VPC security list.

---

title: FAQ

source_url:

html: https://docs.nirvanalabs.io/cloud/networking/vpcs/faq/

md: https://docs.nirvanalabs.io/cloud/networking/vpcs/faq/index.md

---

---

title: Firewall Rules

source_url:

html: https://docs.nirvanalabs.io/cloud/networking/vpcs/firewall-rules/

md: https://docs.nirvanalabs.io/cloud/networking/vpcs/firewall-rules/index.md

---

Firewall rules are used to control inbound traffic to your network, thus ensuring the security and integrity of your application or service by defining which kind of traffic is allowed and from where.

---

title: Nirvana Kubernetes Service (NKS)

source_url:

html: https://docs.nirvanalabs.io/cloud/nks/

md: https://docs.nirvanalabs.io/cloud/nks/index.md

---

Nirvana Kubernetes Service (NKS) is a fully managed Kubernetes service designed to streamline the deployment and management of containerized applications on Nirvana Cloud. By leveraging NKS, organizations can efficiently handle the complexities of Kubernetes while focusing on their core applications and services.

**Importance of Kubernetes in the Web3 Industry**

Kubernetes is essential in the Web3 industry, particularly for the deployment of decentralized applications (dApps), data ingestion pipelines, decentralized exchanges (DEXes), and other blockchain-related services. Its capabilities in orchestration, scaling, and management of containerized workloads make it a crucial tool for ensuring the reliability, security, and performance of Web3 applications.

**Web3 Use Cases for NKS**

* Decentralized Finance (DeFi) Platforms: DeFi platforms, such as lending platforms, yield farming, and synthetic asset issuance, leverage NKS for secure, scalable, and reliable infrastructure.

* Non-Fungible Token (NFT) Marketplaces: NFT marketplaces require robust infrastructure to handle large volumes of transactions and data. NKS provides the necessary scalability and reliability.

* Smart Contract Development and Testing: NKS enables the creation of isolated environments for the development and testing of smart contracts. These environments can be easily replicated and scaled.

* Decentralized Applications (dApps): Deploy and manage dApps efficiently using NKS, benefiting from its scalability and security features.

* Blockchain Data Analytics: Analyzing blockchain data requires processing large datasets, which can be efficiently managed using NKS. NKS can host analytics tools and data pipelines to process and analyze blockchain data.

**Why choose NKS?**

* Fully Managed: NKS is a fully managed Kubernetes service where the Nirvana Labs team handles all aspects of cluster creation, upgrades, and maintenance. This allows customers to concentrate on building and deploying their applications without the need to manage the underlying infrastructure.

* Purpose-Built for Web3: NKS is specifically designed for Web3 applications. Built on Nirvana Cloud, it provides a secure and scalable environment tailored to the unique requirements of Web3 services, ensuring optimal performance and reliability.

* Scalable: NKS offers a highly scalable platform that can adapt to the changing needs of your business. Customers can easily scale their clusters by adding or removing worker nodes or pools as their resource requirements evolve.

**NKS Components**

* Clusters: A cluster is a fundamental unit in Kubernetes, consisting of a set of nodes that run containerized applications. Each cluster includes a controller node that manages the cluster operations and worker nodes that execute the applications.

* Controller Nodes: The controller node is responsible for running the Kubernetes control plane, which manages the cluster and schedules applications on the worker nodes. This node is fully managed by the Nirvana Team, and customers are not billed for it.

* Worker Node Pools: Worker node pools are groups of worker nodes with identical configurations. These pools enable customers to organize their worker nodes according to specific resource requirements, facilitating efficient management and scaling.

* Worker Nodes: Worker nodes are the nodes within a cluster that run the containerized applications. Managed by the controller node, these worker nodes are part of a worker node pool. Customers are billed for the resources consumed by the worker nodes, allowing for cost-effective scaling based on actual usage.

---

title: Clusters

source_url:

html: https://docs.nirvanalabs.io/cloud/nks/clusters/

md: https://docs.nirvanalabs.io/cloud/nks/clusters/index.md

---

Kubernetes clusters are widely used in the cloud industry to deploy and manage containerized applications. With NKS, customers do not need to manage the underlying infrastructure, allowing them to focus on building and deploying their applications.

**Cluster Creation**: Customers can create clusters with just a few clicks through the Nirvana Cloud Dashboard or API. This streamlined process reduces the complexity and time associated with setting up Kubernetes clusters.

**Configuration Options**: When creating a cluster, customers have the flexibility to choose various settings to match their specific requirements:

* Region Selection: Customers can select the geographic region where the cluster will be deployed, ensuring proximity to their workloads and compliance with regional regulations.

* Worker Node Pool Configuration: Customers can define the configuration for worker node pools, including the amount of CPU, RAM, and storage of the worker nodes.

* Number of Worker Nodes: Customers can specify the initial number of worker nodes in each pool, allowing them to start with the resources they need and scale up or down as required.

* Kubernetes Version: Customers can select the desired Kubernetes version, ensuring compatibility with their applications and taking advantage of the latest features and security updates.

* Additional Settings: Other settings such as network policies, storage options, and access controls can be configured to meet the specific needs of the applications and organizational policies.
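
The options listed above can be pictured as a small data structure. The following is a minimal sketch only: `ClusterSpec`, `NodePoolSpec`, their field names, and the validation rules are hypothetical and are not the NKS API schema, and the region and version values are placeholders.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class NodePoolSpec:
    """Hypothetical description of one worker node pool."""
    name: str
    cpu_cores: int     # vCPUs per worker node
    ram_gb: int        # memory per worker node, in GB
    storage_gb: int    # disk per worker node, in GB
    node_count: int    # initial number of worker nodes


@dataclass
class ClusterSpec:
    """Hypothetical description of a cluster (not the NKS API schema)."""
    name: str
    region: str
    kubernetes_version: str
    node_pools: List[NodePoolSpec] = field(default_factory=list)

    def validate(self) -> List[str]:
        """Return a list of problems; an empty list means the spec looks sane.

        The rules below are assumptions made for illustration only.
        """
        problems = []
        if not self.region:
            problems.append("region must be set")
        if not self.node_pools:
            problems.append("at least one worker node pool is required")
        for pool in self.node_pools:
            if pool.node_count < 1:
                problems.append(f"pool {pool.name!r} needs at least one node")
            if min(pool.cpu_cores, pool.ram_gb, pool.storage_gb) <= 0:
                problems.append(f"pool {pool.name!r} has a non-positive resource size")
        return problems


if __name__ == "__main__":
    spec = ClusterSpec(
        name="demo",
        region="example-region-1",      # placeholder, not a real region name
        kubernetes_version="1.30",      # placeholder version string
        node_pools=[NodePoolSpec("general", cpu_cores=4, ram_gb=16, storage_gb=100, node_count=3)],
    )
    print(spec.validate() or "spec looks valid")
```

Checking a spec like this before submitting it through the dashboard or API is one way to catch an empty region or a zero-sized pool early.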

**Scalability**: NKS allows customers to easily scale their clusters by adding or removing worker nodes or entire node pools based on their resource requirements. This dynamic scaling ensures that the cluster can handle varying workloads efficiently without over-provisioning resources.

**Managed Operations**: The Nirvana Team handles the underlying operations and maintenance of the controller nodes, which are the brains of the cluster. This fully managed approach keeps clusters running optimally and securely, with minimal intervention from the customer.

**Deployment Ease**: Deploying applications on NKS clusters is straightforward. Customers can use Kubernetes manifests, Helm charts, or other deployment tools to manage their applications. NKS integrates seamlessly with CI/CD pipelines, enabling automated deployments and updates.
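
As a rough illustration of the manifest-based route, the sketch below builds a minimal `apps/v1` Deployment as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The helper name `deployment_manifest` and the example application name and image are assumptions chosen for illustration.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 2) -> dict:
    """Build a minimal apps/v1 Deployment as a plain dictionary."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


if __name__ == "__main__":
    # Save the output to deployment.json, then: kubectl apply -f deployment.json
    print(json.dumps(deployment_manifest("web", "nginx:1.27"), indent=2))
```

Generating manifests from code like this is one option in a CI/CD pipeline; Helm charts or hand-written YAML serve the same purpose.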

By leveraging NKS for their Kubernetes clusters, customers can achieve a high degree of operational efficiency, scalability, and reliability, allowing them to focus on innovation and delivering value to their users.

---

title: Controller Nodes

source_url:

html: https://docs.nirvanalabs.io/cloud/nks/controller-nodes/

md: https://docs.nirvanalabs.io/cloud/nks/controller-nodes/index.md

---

Controller nodes are the brain of the Kubernetes cluster, performing critical functions that ensure the cluster operates efficiently and reliably.

**Key Functions of Controller Nodes**

* Kubernetes Control Plane: Controller nodes run the Kubernetes control plane, which manages the overall state of the cluster and schedules applications on worker nodes. This includes tasks such as load balancing, scaling, and maintaining the desired state of the applications.

* etcd Database: The controller nodes run the etcd database, which is a distributed key-value store used to persist the cluster's state. This database stores all cluster configuration data and ensures consistency across the cluster.

* Kubernetes API: The Kubernetes API server runs on the controller nodes, providing a RESTful interface for interacting with the cluster. This allows customers to use tools like kubectl, Helm, and other Kubernetes tooling to manage their applications and cluster resources.
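
To make the "RESTful interface" point concrete, the sketch below builds the URL paths that the Kubernetes API server exposes for common resources. The path layout (`/api/v1/...` for the core group, `/apis/<group>/<version>/...` for named groups) is standard Kubernetes; the helper name `resource_path` is an assumption, and the code only constructs paths rather than contacting a cluster.

```python
from typing import Optional
from urllib.parse import quote


def resource_path(
    resource: str,
    namespace: Optional[str] = None,
    name: Optional[str] = None,
    group: str = "",
    version: str = "v1",
) -> str:
    """Return the Kubernetes API path for a resource collection or object."""
    # The core group lives under /api; every other group lives under /apis.
    prefix = f"/api/{version}" if group == "" else f"/apis/{group}/{version}"
    parts = [prefix]
    if namespace is not None:
        parts += ["namespaces", quote(namespace, safe="")]
    parts.append(resource)
    if name is not None:
        parts.append(quote(name, safe=""))
    return "/".join(parts)


if __name__ == "__main__":
    print(resource_path("nodes"))
    print(resource_path("pods", namespace="default"))
    print(resource_path("deployments", namespace="default", name="web", group="apps"))
```

Tools such as kubectl issue authenticated HTTPS requests against paths of this shape; `kubectl get --raw <path>` can be used to query one directly.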

**Managed with ❤️ by the Nirvana Team**

The controller nodes are completely managed by the Nirvana Team. This means that Nirvana Cloud handles all aspects of their operation, including maintenance, updates, and monitoring. Customers do not need to worry about the complexities of managing the control plane infrastructure.

Customers are not billed for controller nodes, which are provided as part of the NKS service. This makes it cost-effective for customers to leverage the full capabilities of Kubernetes without incurring additional costs for control plane management.

**Configuration Options**

* Single Controller Node: Customers can choose a single controller node configuration for their cluster. This option is suitable for development, testing, and smaller production environments where high availability is not a critical requirement.

* Highly Available Configuration: For production environments that require high availability and fault tolerance, customers can opt for a highly available controller node configuration. This setup runs three controller nodes, so the control plane remains operational if one controller node fails. Because etcd needs a majority of its members (two of three) to stay available, losing two controller nodes at the same time would interrupt control plane operations. This redundancy is crucial for maintaining continuous availability and resilience against failures.
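
For the etcd store in particular, availability depends on a majority (quorum) of members being reachable. The short sketch below works through that arithmetic; the function names are illustrative, and it assumes one etcd member per controller node.

```python
def quorum(members: int) -> int:
    """Smallest number of members that forms a majority."""
    if members < 1:
        raise ValueError("a cluster needs at least one member")
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """How many members can fail while a majority is still reachable."""
    return members - quorum(members)


if __name__ == "__main__":
    # members=1 -> quorum 1, tolerates 0 failures
    # members=3 -> quorum 2, tolerates 1 failure
    # members=5 -> quorum 3, tolerates 2 failures
    for n in (1, 3, 5):
        print(f"members={n} quorum={quorum(n)} tolerated_failures={tolerated_failures(n)}")
```

This is also why odd member counts are the norm: a fourth member raises the quorum to three without increasing the number of failures that can be tolerated.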

By providing robust and fully managed controller nodes, NKS ensures that customers can focus on deploying and managing their applications with confidence, knowing that the critical aspects of cluster management are handled by the Nirvana Team.

---

title: Node Pools

source_url:

html: https://docs.nirvanalabs.io/cloud/nks/worker-node-pools/

md: https://docs.nirvanalabs.io/cloud/nks/worker-node-pools/index.md

---

A node pool is a set of worker nodes that share the same configuration, enabling efficient management and scaling of resources based on application requirements.

**Key Features of Node Pools**

* Resource Grouping: Node pools allow customers to group worker nodes according to their resource requirements. This organizational strategy ensures that similar types of workloads are run on nodes with appropriate configurations.

* Multiple Configurations: Customers can create multiple node pools with different configurations to support various types of applications within the same cluster. This flexibility ensures optimal resource utilization and performance.

**Configuration and Scalability**

* Cluster Creation: When creating a cluster, customers can specify the worker node pool configuration, including the number of worker nodes in each pool and other relevant settings. This initial setup can be tailored to meet the specific needs of the applications being deployed.

* Dynamic Scaling: Customers can easily scale their clusters by adding or removing worker nodes or entire node pools based on their resource requirements. This dynamic scaling capability allows for efficient resource management and cost control.
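
When deciding how far to scale a pool, a back-of-the-envelope estimate is often enough. The sketch below is one such estimate, not an NKS feature: the function name, parameters, and the `headroom` fraction reserved for system overhead and bursts are all assumptions.

```python
import math


def nodes_needed(
    total_cpu: float,
    total_ram_gb: float,
    node_cpu: float,
    node_ram_gb: float,
    headroom: float = 0.25,
) -> int:
    """Estimate how many identical worker nodes cover the requested resources.

    headroom is the fraction of each node kept free (an assumed planning margin).
    """
    if node_cpu <= 0 or node_ram_gb <= 0:
        raise ValueError("node sizes must be positive")
    if not 0 <= headroom < 1:
        raise ValueError("headroom must be in [0, 1)")
    usable = 1 - headroom
    by_cpu = math.ceil(total_cpu / (node_cpu * usable))
    by_ram = math.ceil(total_ram_gb / (node_ram_gb * usable))
    # The scarcer resource decides; never go below one node.
    return max(by_cpu, by_ram, 1)


if __name__ == "__main__":
    current_nodes = 3
    target = nodes_needed(total_cpu=20, total_ram_gb=40, node_cpu=8, node_ram_gb=32)
    print(f"target={target}, change={target - current_nodes:+d}")
```

In this example CPU is the limiting resource, so adding memory-heavy nodes would not help; a separate pool with more CPU per node may be the better fit.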

**Optimization for Specific Workloads**

* Compute-Intensive Workloads: For applications that require significant computational power, customers can create node pools with high CPU worker nodes. This ensures that compute-intensive workloads have the necessary resources for optimal performance.

* Memory-Intensive Workloads: Applications that require large amounts of memory can be assigned to node pools with high memory worker nodes. This configuration is ideal for workloads such as large-scale data processing or in-memory databases.

* Storage-Intensive Workloads: For stateful applications that require substantial storage, customers can create node pools with nodes that have large storage capacities. This setup ensures that storage-intensive workloads have sufficient space and performance capabilities.
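
One common way to steer a workload onto a particular pool in Kubernetes is a `nodeSelector` combined with resource requests. The sketch below builds such a Pod manifest as a dictionary. It assumes the nodes in a pool carry a label identifying the pool; the key `example.com/node-pool` and the value `high-memory` are hypothetical, and the label that NKS applies to pool nodes, if any, is not stated here.

```python
import json


def pod_for_pool(
    name: str,
    image: str,
    pool: str,
    cpu_request: str,
    memory_request: str,
    pool_label_key: str = "example.com/node-pool",  # hypothetical label key
) -> dict:
    """Build a Pod manifest that only schedules onto nodes labelled for `pool`."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # The scheduler only considers nodes whose labels match this selector.
            "nodeSelector": {pool_label_key: pool},
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # Requests tell the scheduler how much capacity to reserve.
                    "resources": {
                        "requests": {"cpu": cpu_request, "memory": memory_request}
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    manifest = pod_for_pool("cache", "redis:7", "high-memory", "2", "24Gi")
    print(json.dumps(manifest, indent=2))
```

Running `kubectl get nodes --show-labels` lists the labels actually present on worker nodes, which is the place to check before relying on any particular key.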

**Simplified Management**

With node pools, customers can manage and scale their clusters efficiently without the need to handle individual worker nodes. This abstraction simplifies cluster management and allows customers to focus on application performance and resource optimization.

By leveraging the capabilities of node pools, customers can ensure that their Kubernetes clusters are optimized for diverse workloads, providing a robust and flexible environment for deploying and managing containerized applications.

---

title: Worker Nodes

source_url:

html: https://docs.nirvanalabs.io/cloud/nks/worker-nodes/

md: https://docs.nirvanalabs.io/cloud/nks/worker-nodes/index.md

---

Worker nodes are the backbone of a Kubernetes cluster, responsible for running the actual workloads. These nodes host the containers that constitute the applications deployed on the cluster.

**Key Functions of Worker Nodes**

* Application Execution: Worker nodes run the containers that make up the applications, handling the actual processing, storage, and networking tasks required by the applications.

* Management by Controller Nodes: Controller nodes oversee worker nodes by scheduling applications to run on them, monitoring their health, and managing their lifecycle. This ensures efficient use of resources and high availability of applications.

**Configuration and Deployment**

* Worker Node Pool Configuration: When creating a cluster, customers define the configuration of worker node pools. This configuration includes several critical settings:

  * Instance Type: Specifies the type of virtual machine or physical hardware to be used for the worker nodes, impacting performance and capacity.

  * Number of Worker Nodes: Defines the initial number of worker nodes in the pool, determining the cluster's capacity to handle workloads.

  * Disk Size: Sets the storage capacity available on each worker node, ensuring sufficient space for the containers and their data.

  * Other Settings: Includes additional configurations such as networking, security policies, and resource limits to tailor the nodes to specific application needs.

By carefully configuring worker node pools and leveraging the robust management capabilities of NKS, customers can ensure that their applications run efficiently and reliably on a scalable and flexible Kubernetes infrastructure.